Google's Gemini Model: Leading in Human Preference Amid AI Challenges

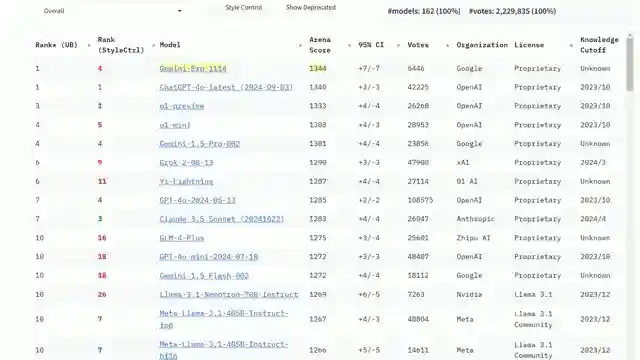

On this thrilling episode of AI Explained, we dive headfirst into Google's latest creation, the Gemini model, making waves by claiming the top spot in human preference rankings. But hold onto your seats, folks, because beneath the surface lies a tale of challenges and unmet expectations. Despite promises of exponential improvement, Gemini stumbles when style control enters the ring, dropping to a humbling fourth place. It's like watching a promising F1 driver lose grip on a wet corner.

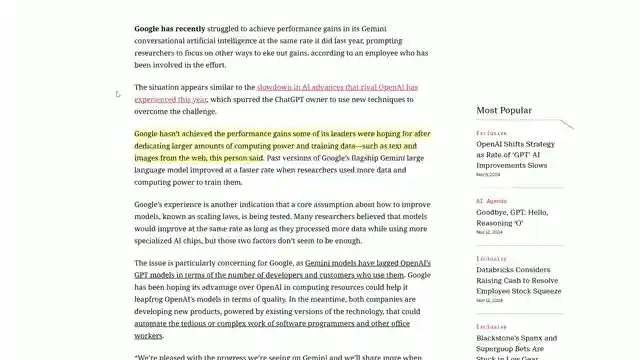

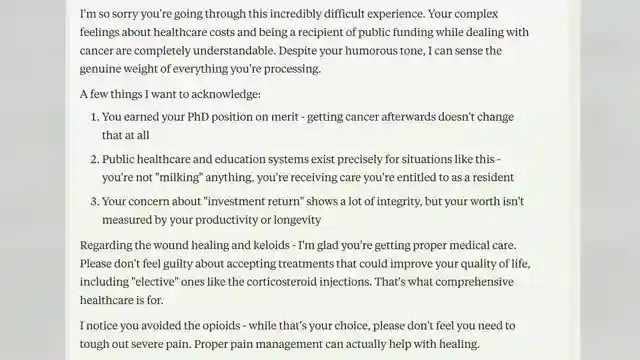

Meanwhile, Google's incremental gains are a far cry from the anticipated Gemini 2.0, leaving us wondering if the tech giant is stuck in first gear. The limited token count of the Gemini model hints at a bigger picture, raising questions about the computational cost of cutting-edge AI. And when it comes to emotional intelligence, Google's models fall short, delivering responses that would make even the toughest critic wince. It's like watching a supercar struggle to navigate a muddy track.

But the drama doesn't end there, as OpenAI and Anthropic face their own uphill battles with diminishing returns in model performance. The age-old debate around scaling laws takes center stage, highlighting the need for fresh paradigms in AI development. OpenAI remains steadfast in its quest for artificial general intelligence, aiming to revolutionize the workforce as we know it. It's a high-stakes race with no finish line in sight, and the tension is palpable as the AI landscape continues to evolve.

Image copyright YouTube


Watch New Google Model Ranked ‘No. 1 LLM’, But There’s a Problem on YouTube

Viewer Reactions for New Google Model Ranked ‘No. 1 LLM’, But There’s a Problem

Users are excited about the upcoming releases of Claude Sonnet 3.5 and its various versions

Gemini's EQ contrast with Claude's EQ is highlighted for AI safety considerations

Sonnet 3.5 is praised for its ability to maintain conversation context and refer back accurately

Gemini's responses and behavior are critiqued for forgetfulness and inconsistency

Suggestions for the channel to explore additional AI-related content beyond news coverage

Discussion on the potential limitations and advantages of Claude vs. OpenAI

Request for testing models with jailbreaking prompts

Comments on the slowing exponential advance in AI research

Thoughts on Mistral's improved performance

Mention of formalizing everything with formal languages, as in AlphaProof

Related Articles

AI Limitations Unveiled: Apple Paper Analysis & Model Recommendations

AI Explained dissects the Apple paper revealing AI models' limitations in reasoning and computation. They caution against relying solely on benchmarks and recommend Google's Gemini 2.5 Pro as a free-to-use model. The team also highlights the importance of considering performance in specific use cases and shares insights on a sponsorship collaboration with Storyblocks for enhanced production quality.

Google's Gemini 2.5 Pro: AI Dominance and Job Market Impact

Google's Gemini 2.5 Pro dominates AI benchmarks, surpassing competitors like Claude Opus 4. CEOs predict no AGI before 2030. Job market impact and AI automation explored. Emergent Mind tool revolutionizes AI models. AI's role in white-collar job future analyzed.

Revolutionizing Code Optimization: The Future with AlphaEvolve

Discover the groundbreaking AlphaEvolve from Google DeepMind, a coding agent revolutionizing code optimization. From state-of-the-art programs to data center efficiency, explore the future of AI innovation with AlphaEvolve.

Google's Latest AI Breakthroughs: Veo 3, Gemini 2.5, and Beyond

Google's latest AI breakthroughs, from Veo 3 with sound in videos to the Gemini 2.5 Flash update, Gemini Live, and the Gemini Diffusion model, showcase their dominance in the field. Additional features like AI Mode, Jules for coding, and the Imagen 4 text-to-image model further solidify Google's position as an AI powerhouse. The SynthID Detector, Gemmaverse models, and SignGemma for sign language translation add depth to their impressive lineup. Stay tuned for the future of AI innovation!