Exploring OpenAI's Language Model Progress and Future Innovations

In this episode of AI Explained, we dig into a leaked report suggesting that progress in OpenAI's language models may be slowing. OpenAI's core model is GPT-4, and its promising successor is codenamed Orion. However, Orion's improvement over GPT-4 is reportedly far smaller than the leap from GPT-3 to GPT-4, which has left experts puzzled. While Orion shows sparks of brilliance, the looming challenge is scaling these models further, given scarce training data and soaring costs.

Amid the uncertainty, OpenAI CEO Sam Altman has dropped hints of groundbreaking advances, including the audacious goal of using AI to solve physics. On one hand, investors and analysts have raised concerns that the performance of large language models may be plateauing. On the other, Altman paints a picture of a future brimming with possibilities and monumental leaps in AI capabilities.

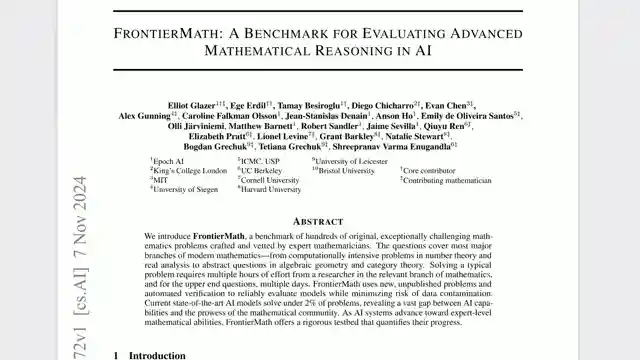

The discussion then turns to the FrontierMath paper, which reveals the stark limitations of current AI models on complex mathematical problems: leading models solve under 2% of its problems. The video argues that the key to further progress lies in data efficiency, which is crucial for overcoming the hurdles in solving intricate problems. Despite the uncertainty surrounding future scaling, there is reason for optimism, especially regarding advances in other AI modalities such as video and image processing.

As the episode draws to a close, viewers are treated to an AI-generated segment that captures the spirit of the ongoing AI saga. Anticipation is also building for OpenAI's public release of Sora, its much-anticipated video generation model, a sign that AI will keep pushing boundaries. The next chapter in this fast-moving field promises to be just as eventful.

Image copyright YouTube

Watch Leak: ‘GPT-5 exhibits diminishing returns’, Sam Altman: ‘lol’ on YouTube

Viewer Reactions for Leak: ‘GPT-5 exhibits diminishing returns’, Sam Altman: ‘lol’

Terence Tao's acknowledgment of a difficult problem

Skepticism towards Sam Altman's AI hype

Importance of research papers in the AI field

Concerns about the reliability of AI progress

Discussion on the limitations of current AI models

Balancing hype and skepticism in AI journalism

Speculation on the future of AGI

Importance of real-world knowledge in AI development

Nuanced views on AI progress

Concerns about the overhype of AI advancements