Google Unveils Gemma Models: Advancing Tech with Multimodal Capabilities

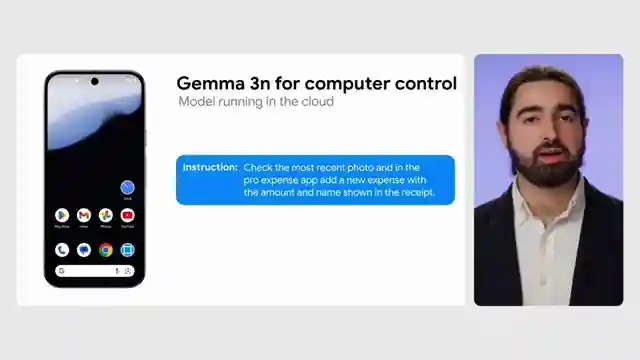

Google has unveiled a lineup of new Gemma models at Google I/O, including the attention-grabbing Gemma 3n models tailored for mobile and on-device applications. These are open-weight models, so users can customize and fine-tune them for their specific needs. Their standout feature is multimodality: beyond text, they handle not only images but also audio. The Gemma 3n demos shown so far are impressive and hint at considerable potential for new applications.

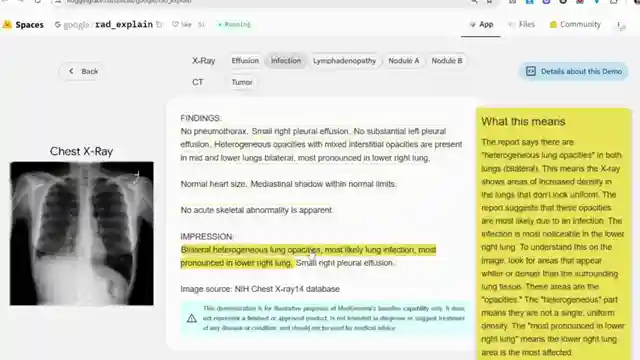

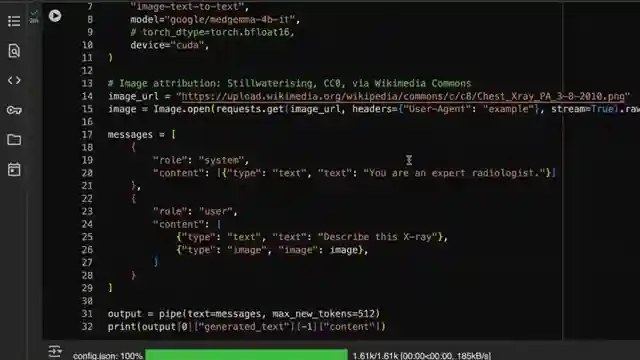

But wait, there's more! Google has also added the MedGemma models to the lineup. They come in two versions, both specialized for the medical domain: a 4B multimodal model that handles medical text and images, and a 27B text-only model for medical text. This marks a shift toward specialized models built for specific tasks such as medical diagnostics. Built on the same architecture as the Gemma 3 models, MedGemma offers a glimpse of a future in which open models catch up with state-of-the-art proprietary ones.

What sets MedGemma apart is that it is available to the public, unlike earlier proprietary medical models that were limited to researchers. Google has also provided notebooks and code for fine-tuning the models, opening up possibilities for both research and product development. On tasks like medical question answering, MedGemma is presented as outperforming earlier, larger models in accuracy. By starting from these pre-trained models and customizing them for specific applications, researchers may be able to reach strong results without training a model from scratch.
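To make the question-answering use case more concrete, here is a minimal sketch of how one might send a question, optionally with an image, to the 4B multimodal MedGemma model through the Hugging Face `transformers` library. It assumes the gated `google/medgemma-4b-it` checkpoint (you must accept its terms on Hugging Face and be logged in), a CUDA GPU, and `torch` and `transformers` installed. The helper names `build_messages`, `load_pipeline` and `ask_medgemma`, and the default system prompt, are hypothetical illustrations, not Google's notebook code.

```python
from functools import lru_cache

# Assumed checkpoint id: the gated, instruction-tuned MedGemma 4B model on the Hugging Face Hub.
MODEL_ID = "google/medgemma-4b-it"


def build_messages(question, image=None, system_prompt="You are an expert radiologist."):
    """Build a chat in the multimodal message format accepted by the
    transformers image-text-to-text pipeline: each turn's "content" is a
    list of typed parts. (Hypothetical helper; the prompt is illustrative.)"""
    user_content = [{"type": "text", "text": question}]
    if image is not None:
        # Only the 4B MedGemma variant is multimodal; the 27B variant is text-only.
        user_content.append({"type": "image", "image": image})
    return [
        {"role": "system", "content": [{"type": "text", "text": system_prompt}]},
        {"role": "user", "content": user_content},
    ]


@lru_cache(maxsize=1)
def load_pipeline(model_id=MODEL_ID):
    """Load the model once and reuse it. Imports are local so that
    build_messages works without torch/transformers installed."""
    import torch
    from transformers import pipeline

    return pipeline(
        "image-text-to-text",
        model=model_id,
        torch_dtype=torch.bfloat16,  # bfloat16 weights to reduce GPU memory use
        device="cuda",
    )


def ask_medgemma(question, image=None, max_new_tokens=200):
    """Send one question (optionally with a PIL image) and return the reply text."""
    pipe = load_pipeline()
    output = pipe(text=build_messages(question, image), max_new_tokens=max_new_tokens)
    # The pipeline returns the whole conversation; the last turn is the model's answer.
    return output[0]["generated_text"][-1]["content"]
```

Calling `ask_medgemma("Describe this X-ray.", Image.open("chest_xray.png"))` (with `from PIL import Image` and a hypothetical file name) would download the checkpoint on first use. Caching the pipeline with `lru_cache` avoids reloading the weights for every question; for fine-tuning, Google's own notebooks are the better starting point.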

Image copyright YouTube

Watch MedGemma - An Open Doctor Model? on YouTube

Viewer Reactions for MedGemma - An Open Doctor Model?

User impressed with the imaging exam results after testing the model

User advocating for similar models in various disciplines like engineering and chemistry

User excited about the privacy benefits of running the model offline

User from Canada interested in using the model for a quick and safe second review

Concern about potential resistance from doctors and nurses towards using such applications

Mention of smaller models overfitting faster than bigger models

Emphasis on accepting liability and risk for a product to be considered good
