Efficient Data Pipeline Techniques: Python Pandas Optimization

- Authors

- Published on

- Published on

In this thrilling episode by IBM Technology, we dive headfirst into the adrenaline-pumping world of data pipelines. These crucial systems are the lifeblood of any data-driven company, but all too often, they buckle under pressure and waste valuable resources. The team at IBM Technology reveals the secrets to building data pipelines that can handle massive amounts of data without breaking a sweat. It's a high-octane race against time as AI models and big data demand real-time processing, pushing these pipelines to their limits.

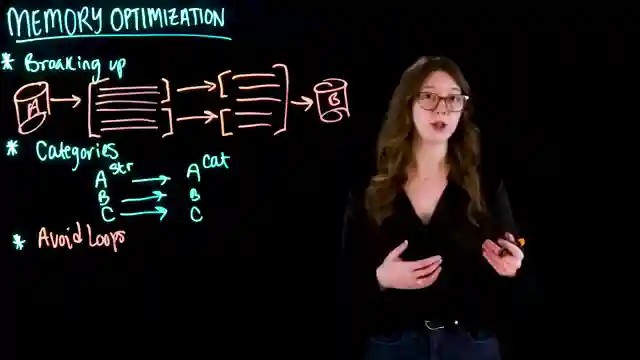

The key to success lies in optimizing memory usage and ensuring continuous operation. By chunking data into smaller pieces during extraction and transforming string data into categories, these pipelines become lean, mean data-processing machines. The team warns against the dangers of recursive logic and loops, advocating for pre-built aggregation functions for maximum efficiency. Monitoring memory usage is crucial to avoid crashes and maintain peak performance as data complexity grows.

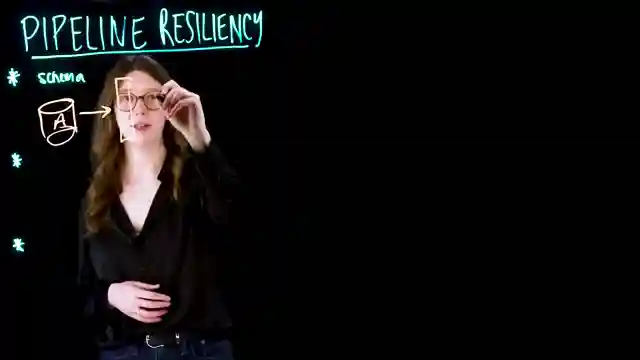

But that's not all - the adrenaline really kicks in when discussing failure control. The team emphasizes the importance of preparing pipelines to automatically restart in case of failure, without any manual intervention. By implementing schema controls and checkpointing, data quality is ensured, and progress can be tracked even in the face of interruptions. With retry logic built into each phase of the pipeline, small failures are handled seamlessly, ensuring a smooth journey from start to finish. By following these best practices, data pipelines are equipped to scale with the demands of AI and big data, ready to conquer whatever challenges come their way.

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch Scaling Data Pipelines: Memory Optimization & Failure Control on Youtube

Viewer Reactions for Scaling Data Pipelines: Memory Optimization & Failure Control

Memory Optimization techniques discussed:

- Using chunking for data processing

- Converting string data to categorical data

- Utilizing built-in Pandas functions for aggregation

Failure Control strategies mentioned:

- Implementing schema validation

- Adding retry logic

- Using checkpointing for progress tracking

Emphasis on the importance of these techniques for handling big data and AI demands.

Related Articles

Mastering Identity Propagation in Agentic Systems: Strategies and Challenges

IBM Technology explores challenges in identity propagation within agentic systems. They discuss delegation patterns and strategies like OAuth 2, token exchange, and API gateways for secure data management.

AI vs. Human Thinking: Cognition Comparison by IBM Technology

IBM Technology explores the differences between artificial intelligence and human thinking in learning, processing, memory, reasoning, error tendencies, and embodiment. The comparison highlights unique approaches and challenges in cognition.

AI Job Impact Debate & Market Response: IBM Tech Analysis

Discover the debate on AI's impact on jobs in the latest IBM Technology episode. Experts discuss the potential for job transformation and the importance of AI literacy. The team also analyzes the market response to the Scale AI-Meta deal, prompting tech giants to rethink data strategies.

Enhancing Data Security in Enterprises: Strategies for Protecting Merged Data

IBM Technology explores data utilization in enterprises, focusing on business intelligence and AI. Strategies like data virtualization and birthright access are discussed to protect merged data, ensuring secure and efficient data access environments.