Deep Hermes 3 Review: Toggling Thinking Modes and Unconventional Tests

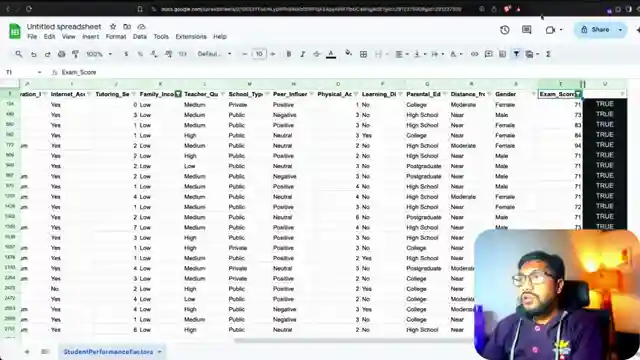

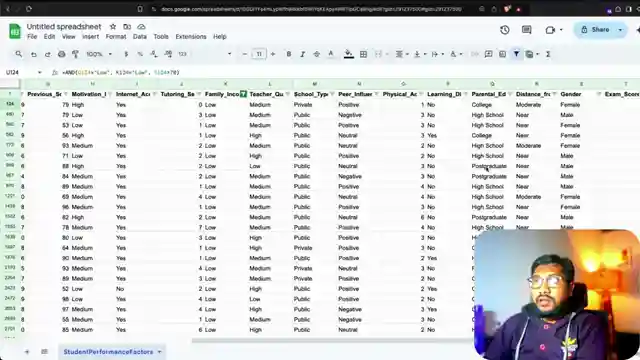

Deep Hermes 3 is a model that toggles between thinking and non-thinking modes at the flick of a switch. The team digs into the details, praising its performance even though it is built on Llama 3.1 rather than the more advanced Qwen. They then run a series of unconventional tests, from solving Google Sheets formulas to tackling Wolfram Alpha equations, to probe the model's reasoning and problem-solving.
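The article does not say how the switch between modes works. As a rough illustration only, the sketch below assumes the toggle is a system prompt that asks the model to reason inside `<think>` tags. The prompt text and the helper names `build_messages` and `split_reasoning` are hypothetical, not taken from the video or the model's documentation.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical system prompt: the real model's trigger text may differ.
THINKING_SYSTEM_PROMPT = (
    "You are a deep thinking AI. Reason step by step inside <think> </think> "
    "tags before giving your final answer."
)


def build_messages(user_prompt: str, thinking: bool) -> List[Dict[str, str]]:
    """Build a chat-style message list; add the reasoning prompt only when thinking is on."""
    messages: List[Dict[str, str]] = []
    if thinking:
        messages.append({"role": "system", "content": THINKING_SYSTEM_PROMPT})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def split_reasoning(reply: str) -> Tuple[str, str]:
    """Split a reply into (reasoning, answer); reasoning is empty if there is no <think> block."""
    match = re.search(r"<think>(.*?)</think>", reply, flags=re.DOTALL)
    if match is None:
        return "", reply.strip()
    return match.group(1).strip(), reply[match.end():].strip()
```

The message list can be passed to any chat-style inference client. `split_reasoning` lets the same calling code handle both modes, since a non-thinking reply simply comes back with an empty reasoning string.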

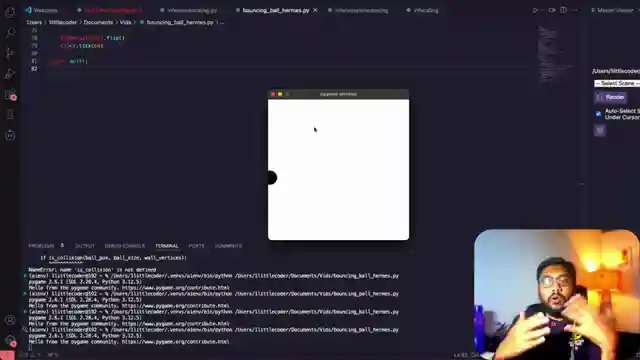

Not everything goes smoothly for Deep Hermes 3, however. When asked to code a bouncing ball inside a rotating hexagon, the model falters. The team presses on and tests how accurately it identifies chemical compounds. Here Deep Hermes 3 does well, outperforming other models and delivering precise results.

The tests continue with medical symptom analysis and problems from competitive exams. Deep Hermes 3 is accurate on some of these tasks and stumbles on others. Through a mix of praise and critique, the team gives a balanced picture of the model's strengths and weaknesses.

Image copyright YouTube


Watch Local AI Just Got Crazy Smart—And It’s Only 8B Thinking LLM! on YouTube

Viewer Reactions for Local AI Just Got Crazy Smart—And It’s Only 8B Thinking LLM!

Viewers appreciate the in-depth evaluation of small models

Channel is gaining popularity and nearing 100k subscribers

Suggestions for improving video quality such as lighting and exposure

Comparison with other models like Llama R1 Distilled and potential for larger versions of the model

Comments on the model's strengths in logical reasoning and creative output

Questions about the model's output tokens and potential for short responses

Interest in using local AI for privacy and for handling organizational data

Potential for models with agentic capabilities in powering game NPCs

Requests for information on offline version capabilities and function calling

Comparisons with other models like Yi-Coder-9B-Chat and Claude Sonnet 3.5
