Decoding Algorithmic Fairness: Challenges and Real-World Implications

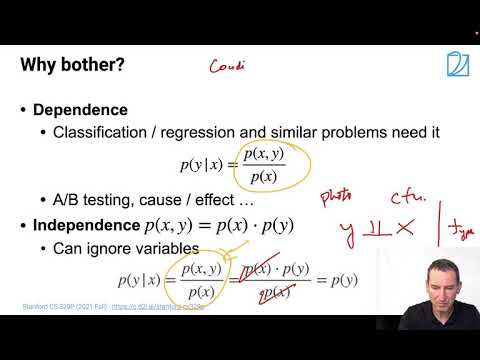

In this discussion, Alex Smola's team digs into fairness criteria for classifiers and the difficulty of satisfying several of them at the same time. Papers by Kleinberg, Mullainathan, and Raghavan, and by Chouldechova, highlight how hard it is to obtain classifiers that are well calibrated within each group while also balancing outcomes between groups. The takeaway is sobering: a classifier that meets every fairness requirement at once is, outside of special cases, out of reach.
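To make the tension concrete, here is a minimal sketch built on a well-known identity from this literature (it appears in Chouldechova's 2017 paper): for any binary classifier, a group's false positive rate is fixed by its base rate `p`, positive predictive value (PPV), and false negative rate (FNR). The function name and all numbers below are hypothetical, chosen only for illustration; they are not taken from the lecture.

```python
def implied_fpr(base_rate, ppv, fnr):
    """False positive rate forced by a group's base rate, PPV, and FNR.

    Identity: FPR = p / (1 - p) * (1 - PPV) / PPV * (1 - FNR)
    """
    return base_rate / (1 - base_rate) * (1 - ppv) / ppv * (1 - fnr)


# Hypothetical values: both groups get the same PPV and the same FNR,
# but their base rates differ.
shared_ppv, shared_fnr = 0.8, 0.2
fpr_a = implied_fpr(base_rate=0.5, ppv=shared_ppv, fnr=shared_fnr)
fpr_b = implied_fpr(base_rate=0.2, ppv=shared_ppv, fnr=shared_fnr)

print(f"group A FPR: {fpr_a:.3f}")
print(f"group B FPR: {fpr_b:.3f}")
```

With equal PPV and equal FNR, the two false positive rates come out at roughly 0.20 and 0.05. Equalizing all three quantities is therefore only possible when the base rates match or the classifier is perfect.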

Working through the 1971 diagram discussed by Hutchinson and Mitchell, the team lays out the trade-offs involved in meeting different fairness criteria. Enter the Pokemon Theorem, which dispels any illusion of matching distributions perfectly across all statistics. Verma and Rubin's 2018 paper widens the view, cataloguing fairness definitions that range from calibration to conditional demographic disparity. Each criterion constrains a different quantity, which is why they so often conflict.
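As a rough illustration of how such definitions differ, the sketch below computes four per-group quantities that common criteria compare: the positive prediction rate (statistical parity), the false positive and false negative rates (equalized odds), and the positive predictive value (predictive parity). The function `group_metrics` and the toy labels are assumptions made for this example, not material from the lecture.

```python
from collections import defaultdict


def _ratio(numerator, denominator):
    """Safe division; returns NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def group_metrics(y_true, y_pred, groups):
    """Per-group confusion-matrix rates for binary labels and predictions."""
    cells = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        # "t"/"f": prediction correct or not; "p"/"n": predicted class.
        key = ("t" if truth == pred else "f") + ("p" if pred == 1 else "n")
        cells[group][key] += 1

    metrics = {}
    for group, c in cells.items():
        total = sum(c.values())
        metrics[group] = {
            "positive_rate": _ratio(c["tp"] + c["fp"], total),  # statistical parity
            "fpr": _ratio(c["fp"], c["fp"] + c["tn"]),          # equalized odds
            "fnr": _ratio(c["fn"], c["fn"] + c["tp"]),          # equalized odds
            "ppv": _ratio(c["tp"], c["tp"] + c["fp"]),          # predictive parity
        }
    return metrics


# Synthetic toy data, for illustration only.
y_true = [1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

result = group_metrics(y_true, y_pred, groups)
for group, values in result.items():
    print(group, values)
```

On this toy data the two groups differ on every one of the four quantities, so a fix that equalizes one of them says nothing about the others.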

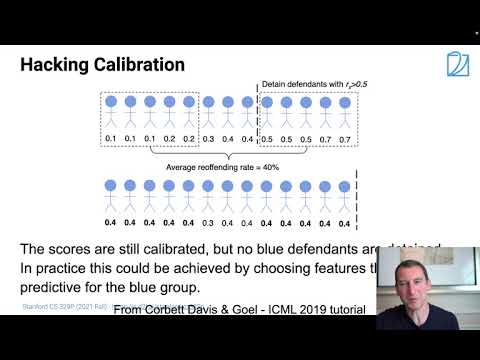

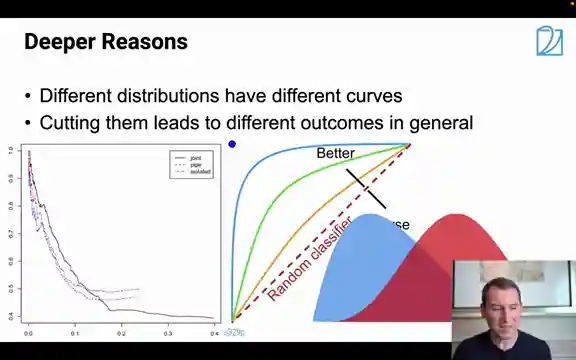

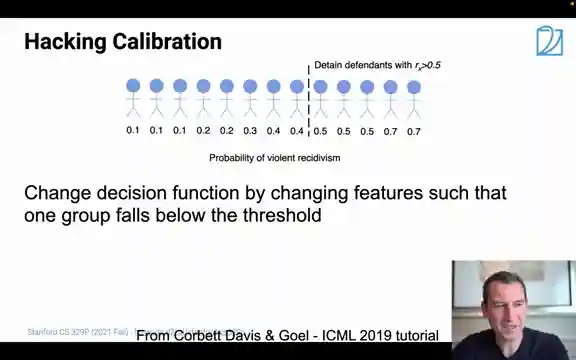

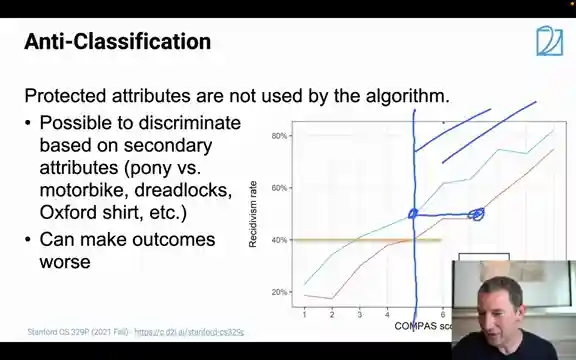

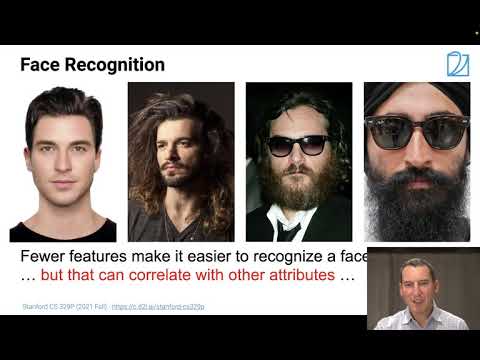

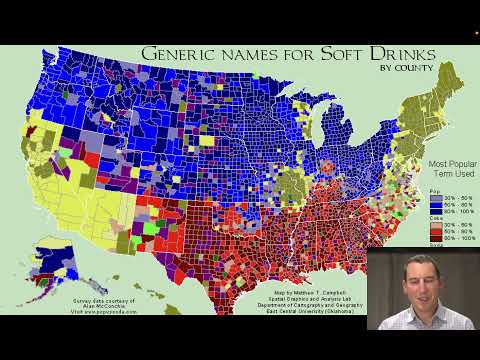

Corbett-Davies and Goel's study of risk scores for Black and white defendants is a stark reminder of the real-world consequences of manipulating the features a classifier uses. Discrepancies between the groups' curves can lead to very different outcomes, which calls for careful scrutiny of the data before any claim of fairness is made. The COMPAS scores serve as a cautionary tale: ignoring gender can inadvertently perpetuate bias in algorithmic outcomes. Every modeling decision here carries real consequences.
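One simple way to scrutinize the data in this spirit is to check whether the same score corresponds to the same observed outcome rate in each group. The sketch below does that for a handful of synthetic records; the helper `observed_rate_by_score`, the group labels `g1` and `g2`, and every number are made up for illustration and are not COMPAS data.

```python
from collections import defaultdict


def observed_rate_by_score(records):
    """Map (score, group) to the fraction of records with outcome == 1."""
    counts = defaultdict(lambda: [0, 0])  # [positives, total]
    for score, group, outcome in records:
        counts[(score, group)][0] += outcome
        counts[(score, group)][1] += 1
    return {key: pos / total for key, (pos, total) in counts.items()}


# Synthetic toy records: (risk score, group label, observed outcome).
records = [
    (7, "g1", 1), (7, "g1", 1), (7, "g1", 1), (7, "g1", 0),
    (7, "g2", 1), (7, "g2", 0), (7, "g2", 0), (7, "g2", 0),
]

rates = observed_rate_by_score(records)
for (score, group), rate in sorted(rates.items()):
    print(f"score={score} group={group} observed rate={rate:.2f}")
```

If a score of 7 corresponds to an observed rate of 0.75 in one group and 0.25 in the other, as in this toy data, the score does not mean the same thing across groups, and a single threshold would treat them differently.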

Image copyright YouTube


Watch Lecture 14, Part 3, Fairness in Practice on YouTube


Related Articles

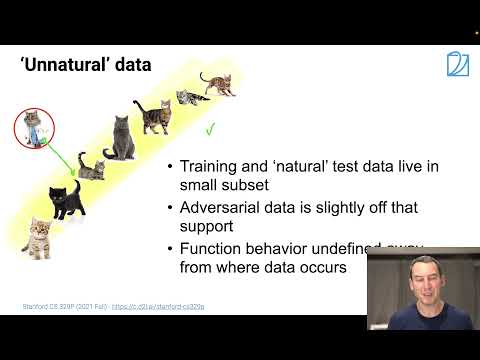

Unveiling Adversarial Data: Deception in Recognition Systems

Explore the world of adversarial data with Alex Smola's team, uncovering how subtle tweaks deceive recognition systems. Discover the power of invariances in enhancing classifier accuracy and defending against digital deception.

Unveiling Coverage Shift and AI Bias: Optimizing Algorithms with Generators and GANs

Explore coverage shift and AI bias in this insightful Alex Smola video. Learn about using generators, GANs, and dataset consistency to address biases and optimize algorithm performance. Exciting revelations await in this deep dive into the world of artificial intelligence.

Mastering Coverage Shift in Machine Learning

Explore coverage shift in machine learning with Alex Smola. Learn how data discrepancies can derail classifiers, leading to failures in real-world applications. Discover practical solutions and pitfalls to avoid in this insightful discussion.

Mastering Random Variables: Independence, Sequences, and Graphs with Alex Smola

Explore dependent vs. independent random variables, sequence models like RNNs, and graph concepts in this dynamic Alex Smola lecture. Learn about independence tests, conditional independence, and innovative methods for assessing dependence. Dive into covariance operators and information theory for a comprehensive understanding of statistical relationships.