Deciphering Complexity: Strategies for Model Explainability

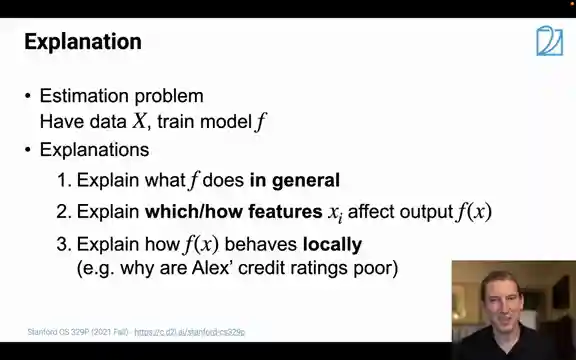

Welcome to the grand finale of this class, which wades into the murky waters of explainability. It opens with Plato's cave, the ancient allegory in which prisoners mistake shadows on a wall for reality, much as we glimpse a complex model only through its outputs. Moving to the present day, a personal saga of credit card troubles shows how opaque credit scores can be: the struggle to navigate that system mirrors the ongoing quest for clear model explanations.

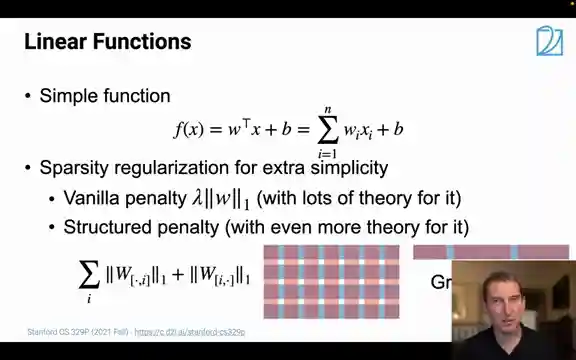

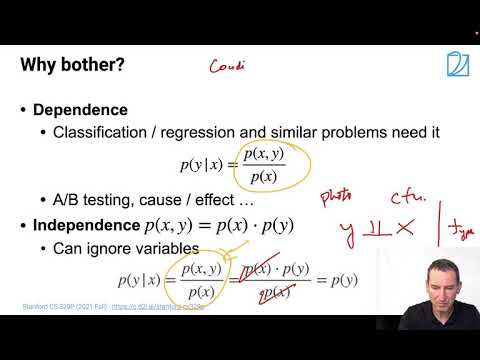

Several strategies follow. The simplest is a linear function in the spirit of Ohm's law (V = IR), where each coefficient states directly how much a feature contributes, which makes feature importance easy to read off. As the number of features grows, penalties come into play: a sparsity-inducing penalty such as the L1 norm drives many coefficients to exactly zero and leaves a clearer picture. Structured norms go a step further by eliminating entire rows or columns of a parameter matrix at once, streamlining the model even more. Linear models, while foundational, may still falter in high-dimensional settings, which prompts the search for alternatives that are simpler yet still effective.
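The lecture's own derivations are not reproduced in this summary, so what follows is a minimal sketch under stated assumptions: a multi-output linear model `W` fit with squared loss, an L1 penalty for sparsity, and a row-wise group (L2,1) norm standing in for the structured penalty, both solved with proximal gradient descent (ISTA). ISTA is one standard solver for these objectives, not necessarily the one used in the lecture, and the helper names `soft_threshold`, `group_soft_threshold`, `proximal_gradient` and the synthetic data are hypothetical.

```python
import numpy as np

# Minimal sketch (assumed setup): squared loss plus a sparsity penalty,
# solved with proximal gradient descent (ISTA). All names are hypothetical.

def soft_threshold(W, t):
    # Prox of t * ||W||_1: shrink every entry toward zero by t,
    # setting entries with |w| <= t exactly to zero.
    return np.sign(W) * np.maximum(np.abs(W) - t, 0.0)

def group_soft_threshold(W, t):
    # Prox of t * sum_i ||W[i, :]||_2 (row-wise L2,1 norm): shrink each row
    # as a unit, zeroing whole rows (i.e. whole input features) with norm <= t.
    norms = np.linalg.norm(W, axis=1, keepdims=True)
    scale = np.maximum(1.0 - t / np.maximum(norms, 1e-12), 0.0)
    return W * scale

def proximal_gradient(X, Y, lam, prox, n_iter=500):
    # Minimize 0.5/n * ||X W - Y||_F^2 + lam * penalty(W):
    # a gradient step on the loss, then the penalty's proximal operator.
    n = X.shape[0]
    step = n / np.linalg.norm(X, 2) ** 2  # 1 / Lipschitz constant of the gradient
    W = np.zeros((X.shape[1], Y.shape[1]))
    for _ in range(n_iter):
        grad = X.T @ (X @ W - Y) / n
        W = prox(W - step * grad, step * lam)
    return W

# Synthetic example: 10 input features, 3 outputs, only features 0 and 1 matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
W_true = np.zeros((10, 3))
W_true[0] = [2.0, -1.0, 0.5]
W_true[1] = [0.0, 3.0, 1.0]
Y = X @ W_true + 0.1 * rng.normal(size=(200, 3))

W_l1 = proximal_gradient(X, Y, lam=0.1, prox=soft_threshold)
W_group = proximal_gradient(X, Y, lam=0.3, prox=group_soft_threshold)
print("nonzero entries (L1):", np.count_nonzero(W_l1))
print("features kept (group):", np.flatnonzero(np.linalg.norm(W_group, axis=1)))
```

The two penalties differ in what they zero out: L1 removes individual coefficients, while the row-wise group norm removes an input feature for every output at once, so the surviving rows read directly as "the features this model uses."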

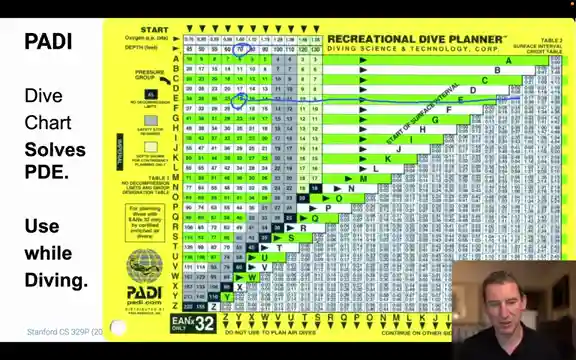

Enter dive tables and the rule of nines: practical tools for high-stress settings, from planning decompression on a dive to estimating the burned share of a patient's body surface in a trauma center, that support quick decisions without complex computation. These straightforward models are not perfect, but they provide invaluable guidance in critical situations. The theme running throughout is the power of simple, streamlined explanations amid the labyrinth of complex data analysis.
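To make the idea concrete, here is a minimal sketch of the rule of nines as a lookup table plus a sum, using the commonly cited adult weights (head and neck 9%, each arm 9%, front and back of the torso 18% each, each leg 18%, perineum 1%). The region labels and the function `burned_tbsa` are hypothetical names chosen for illustration.

```python
# Commonly cited adult "rule of nines" weights (% of total body surface area).
# Region names are hypothetical labels chosen for this sketch.
RULE_OF_NINES = {
    "head_and_neck": 9,
    "left_arm": 9,
    "right_arm": 9,
    "front_torso": 18,
    "back_torso": 18,
    "left_leg": 18,
    "right_leg": 18,
    "perineum": 1,
}

def burned_tbsa(burned_regions):
    """Estimate % of body surface burned by adding up fixed region weights."""
    regions = set(burned_regions)  # count each region once
    unknown = regions - set(RULE_OF_NINES)
    if unknown:
        raise ValueError(f"unknown regions: {sorted(unknown)}")
    return sum(RULE_OF_NINES[r] for r in regions)

# Every estimate is an explicit sum of table entries, so it explains itself.
print(burned_tbsa(["left_arm", "front_torso"]))  # 9 + 18 = 27
```

The model is additive with fixed weights, so anyone can check an estimate by hand, which is exactly the property that makes such tools usable under pressure.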

Image copyright YouTube

Watch Lecture 15, Part 1, Simple Explanations on YouTube

Related Articles

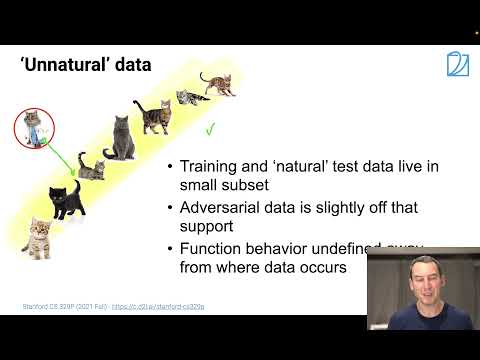

Unveiling Adversarial Data: Deception in Recognition Systems

Explore the world of adversarial data with Alex Smola's team, uncovering how subtle tweaks deceive recognition systems. Discover the power of invariances in enhancing classifier accuracy and defending against digital deception.

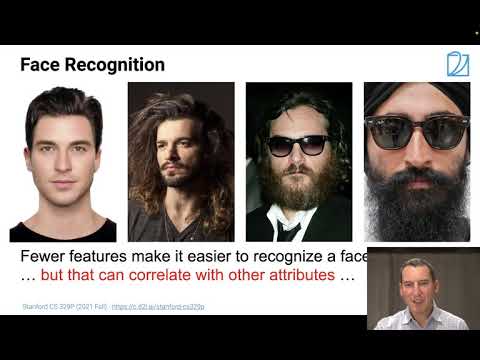

Unveiling Coverage Shift and AI Bias: Optimizing Algorithms with Generators and GANs

Explore coverage shift and AI bias in this insightful Alex Smola video. Learn about using generators, GANs, and dataset consistency to address biases and optimize algorithm performance. Exciting revelations await in this deep dive into the world of artificial intelligence.

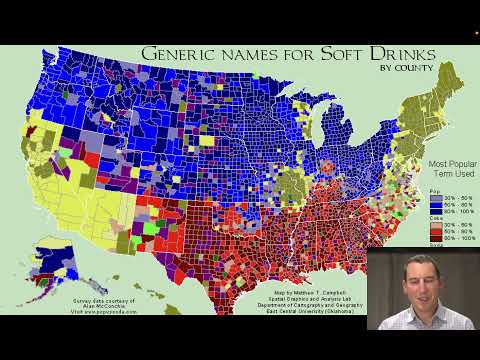

Mastering Coverage Shift in Machine Learning

Explore coverage shift in machine learning with Alex Smola. Learn how data discrepancies can derail classifiers, leading to failures in real-world applications. Discover practical solutions and pitfalls to avoid in this insightful discussion.

Mastering Random Variables: Independence, Sequences, and Graphs with Alex Smola

Explore dependent vs. independent random variables, sequence models like RNNs, and graph concepts in this dynamic Alex Smola lecture. Learn about independence tests, conditional independence, and innovative methods for assessing dependence. Dive into covariance operators and information theory for a comprehensive understanding of statistical relationships.