Cost-Effective Language Model Fine-Tuning with LoRA: A Complete Guide

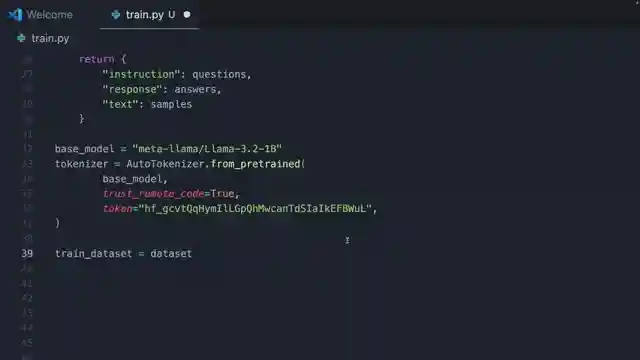

In this video, Nicholas Renotte shows how to fine-tune a large language model on a shoestring budget. He introduces LoRA (low-rank adaptation), an alternative to the costly and time-consuming process of full fine-tuning. LoRA injects specific knowledge by training a small adapter instead of retraining all of the model's parameters, which makes it cost-effective enough to run smoothly even on basic laptops. Renotte's rapid end-to-end walkthrough, which covers creating custom training data sets in under 60 minutes, shows what the approach can do.
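To make the idea of "not retraining all parameters" concrete, here is a minimal sketch of a single LoRA-adapted layer in plain Python. It is not code from the video: the sizes, the `alpha` scaling factor, and the helper names `matvec` and `lora_forward` are illustrative assumptions. The frozen weight `W` is left untouched, and only the two small matrices `A` and `B` would be trained.

```python
import random

def matvec(m, v):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha, r):
    """Return W x + (alpha / r) * B (A x). W stays frozen; only A and B train."""
    base = matvec(W, x)                  # output of the original layer
    update = matvec(B, matvec(A, x))     # low-rank correction, rank r
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

random.seed(0)
d_in, d_out, r, alpha = 6, 4, 2, 4       # illustrative sizes only (assumption)

# Frozen pretrained weight, shape d_out x d_in
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
# Trainable adapter matrices: A is r x d_in, B is d_out x r
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
B = [[0.0] * r for _ in range(d_out)]    # zero init, as in the usual LoRA setup

x = [1.0] * d_in
y_start = lora_forward(W, A, B, x, alpha, r)

# Because B starts at zero, the adapter initially leaves the model unchanged.
print(y_start == matvec(W, x))
```

Training would then update only `A` and `B`, which together hold `r * (d_in + d_out)` numbers rather than the `d_in * d_out` in `W`.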

Renotte then covers LoRA's structure, highlighting the rank hyperparameter, which determines the adapter's size. The key is to balance adapter capacity against cost, so that the rank is no higher than the task needs. He tests the language model's understanding of TM1 with a set of predefined questions, which underlines how much a tailored data set matters for performance. This sets the stage for the next phase: synthetic data generation, using Docling to transform source information into an instruction-tuning data set.
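The effect of the rank on adapter size can be worked out with simple arithmetic. The sketch below assumes a single square weight matrix of 4096 x 4096, a size chosen only for illustration and not taken from the video. For a `d_out x d_in` matrix, the adapter adds `r * (d_in + d_out)` trainable parameters.

```python
def full_params(d_in, d_out):
    """Parameters in the full weight matrix that LoRA leaves frozen."""
    return d_in * d_out

def lora_params(d_in, d_out, r):
    """Trainable parameters in a rank-r adapter: A is r x d_in, B is d_out x r."""
    return r * (d_in + d_out)

d_in = d_out = 4096  # assumed layer size, for illustration only

for r in (4, 8, 16, 64):
    n = lora_params(d_in, d_out, r)
    share = n / full_params(d_in, d_out)
    print(f"r={r:>2}: {n:>9,} adapter params ({share:.2%} of the full matrix)")
```

At rank 8, for example, the adapter holds 65,536 parameters against 16,777,216 in the full matrix. Doubling the rank doubles the adapter, which is why an unnecessarily high rank translates directly into extra training cost.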

Renotte guides viewers through installing Docling and the other required modules, then generating instruction pairs with a larger LLM. The walkthrough pays close attention to detail and to resource use, and it makes the concepts behind fine-tuning accessible to enthusiasts at any level. Renotte's focus on cost-effective solutions encourages viewers to explore fine-tuning with LoRA for themselves.
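The instruction-pair step can be sketched roughly as follows. This is not the code from the video: Docling's document parsing and chunking are replaced by a naive paragraph splitter, the prompt wording is invented, and `fake_llm` is a hypothetical stub standing in for a call to whichever larger model is used. The sketch only shows the general shape: chunk the text, ask a model for question and answer pairs as JSON, keep the well-formed ones, and write them out as JSONL.

```python
import json

def split_into_chunks(text, max_chars=500):
    """Naive paragraph-based chunker (a stand-in for a real document chunker)."""
    chunks, current = [], ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        if current and len(current) + len(para) + 2 > max_chars:
            chunks.append(current)
            current = para
        else:
            current = f"{current}\n\n{para}" if current else para
    if current:
        chunks.append(current)
    return chunks

def build_prompt(chunk, n_pairs=3):
    """Illustrative prompt asking for instruction pairs as a JSON list."""
    return (
        f"Write {n_pairs} question and answer pairs about the passage below. "
        'Reply with a JSON list of objects with keys "instruction" and "response".\n\n'
        f"Passage:\n{chunk}"
    )

def parse_pairs(raw):
    """Keep only well-formed pairs from the model's JSON reply."""
    try:
        items = json.loads(raw)
    except json.JSONDecodeError:
        return []
    if not isinstance(items, list):
        return []
    return [
        {"instruction": i["instruction"].strip(), "response": i["response"].strip()}
        for i in items
        if isinstance(i, dict)
        and isinstance(i.get("instruction"), str)
        and isinstance(i.get("response"), str)
    ]

def fake_llm(prompt):
    """Hypothetical stub; swap in a real call to a larger model."""
    return json.dumps(
        [{"instruction": "What does the passage describe?",
          "response": "A placeholder answer."}]
    )

def build_dataset(text, generate=fake_llm):
    """Run every chunk through the generator and collect the valid pairs."""
    rows = []
    for chunk in split_into_chunks(text):
        rows.extend(parse_pairs(generate(build_prompt(chunk))))
    return rows

def to_jsonl(rows):
    """Serialize pairs one JSON object per line, a common fine-tuning format."""
    return "\n".join(json.dumps(r) for r in rows)

rows = build_dataset("First paragraph.\n\nSecond paragraph.")
print(to_jsonl(rows))
```

Filtering in `parse_pairs` matters in practice because a generating model does not always return valid JSON, and malformed rows are cheaper to drop here than to debug during training.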

Image copyright YouTube

Watch How to Fine Tune your own LLM using LoRA (on a CUSTOM dataset!) on YouTube

Viewer Reactions for How to Fine Tune your own LLM using LoRA (on a CUSTOM dataset!)

Positive feedback on the video content and pace

Request for information on advantages of fine-tuning over RAG implementation

Collaboration proposal for an AI literacy platform

Request for a video on training a Mario playing agent using Markov decision process

Question about the use of .contextualize() in the video

Inquiry about fine-tuning locally on a Mac GPU

Appreciation for the informative content and preference for long-format videos
