Building Local Agents with LangGraph: Unveiling Rome's Best Pizza Secrets

In this episode from James Briggs, we dive headfirst into building local agents with LangGraph and the Llama 3.1 8B model. LangGraph, a library from the team behind LangChain, lets us construct agents as a graph of nodes and edges. Ollama, an open-source tool, provides the means to run LLMs locally with remarkable ease. Forget the mundane: this is where the real action begins.

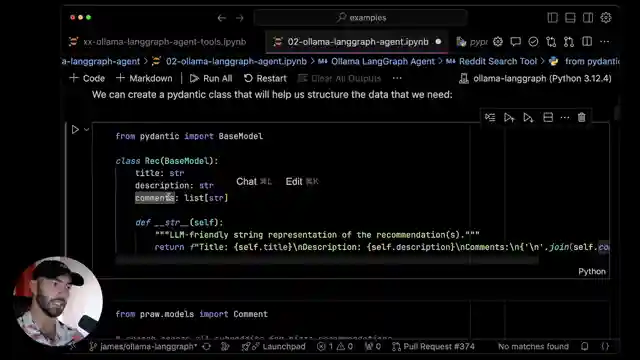

Our journey kicks off with downloading Ollama for macOS, followed by setting up a Python environment by cloning the examples repository. Poetry is installed, and the stage is set for running the notebook in VS Code. But hold on tight, because things are about to get more interesting. Enter the Reddit API, a gateway to a treasure trove of pizza recommendations in Rome. By signing up and obtaining the necessary keys, users can query Reddit programmatically and tap into a world of culinary insights.
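As a rough illustration of the key-handling step, the sketch below reads the Reddit app keys from environment variables and passes them to PRAW. The variable names (`REDDIT_CLIENT_ID`, `REDDIT_CLIENT_SECRET`, `REDDIT_USER_AGENT`), the default user agent string, and the helper names are assumptions made for this example, not something shown in the video.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class RedditCredentials:
    client_id: str
    client_secret: str
    user_agent: str


def load_credentials(env=os.environ) -> RedditCredentials:
    """Read Reddit app keys from a mapping (environment variable names are assumptions)."""
    required = ("REDDIT_CLIENT_ID", "REDDIT_CLIENT_SECRET")
    missing = [key for key in required if not env.get(key)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return RedditCredentials(
        client_id=env["REDDIT_CLIENT_ID"],
        client_secret=env["REDDIT_CLIENT_SECRET"],
        # Hypothetical default; Reddit expects a descriptive user agent.
        user_agent=env.get("REDDIT_USER_AGENT", "search-tool-example"),
    )


def make_reddit_client(creds: RedditCredentials):
    """Build a read-only PRAW client; requires `pip install praw`."""
    import praw  # imported lazily so the rest of the sketch runs without PRAW

    return praw.Reddit(
        client_id=creds.client_id,
        client_secret=creds.client_secret,
        user_agent=creds.user_agent,
    )
```

Keeping the keys out of the notebook itself means the notebook can be shared or committed without leaking them.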

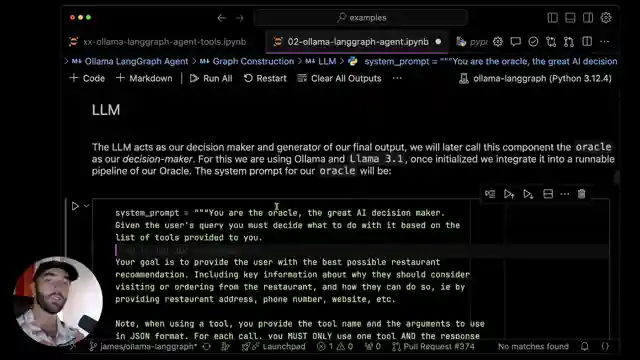

With the Reddit API as our trusty sidekick, we embark on a quest to unearth the best pizza joints in Rome. Armed with the Python Reddit API Wrapper (PRAW), we scour submissions, pulling titles, descriptions, and top-rated comments to curate a feast of information. The retrieved data is formatted into an LLM-friendly text layout, setting the stage for the grand reveal of our pizza paradise. As the Oracle component takes the helm, it decides for each query whether to call the search tool or the final answer tool.
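The retrieval and formatting step might look roughly like the sketch below. The `Rec` class, the `search` helper, the 20-upvote threshold, and the text layout are assumptions chosen for illustration; the PRAW calls used are `subreddit(...).search(...)` and `submission.comments.list()`. The stand-in objects at the bottom are fake data so the sketch runs without network access.

```python
from dataclasses import dataclass, field
from types import SimpleNamespace


@dataclass
class Rec:
    """One Reddit submission reduced to the text an LLM needs."""
    title: str
    description: str
    comments: list = field(default_factory=list)

    def __str__(self) -> str:
        comments = "\n".join(f"- {c}" for c in self.comments)
        return (
            f"Title: {self.title}\n"
            f"Description: {self.description}\n"
            f"Comments:\n{comments}"
        )


def search(reddit, query, max_posts=3, max_comments=3, min_upvotes=20):
    """Collect submissions matching `query`, keeping only well-upvoted comments."""
    recs = []
    for submission in reddit.subreddit("all").search(query, limit=max_posts):
        top = []
        for comment in submission.comments.list():
            body = getattr(comment, "body", None)
            if body is None:
                continue  # skip placeholders that carry no comment text
            if getattr(comment, "ups", 0) >= min_upvotes:
                top.append(body)
        recs.append(Rec(submission.title, submission.selftext, top[:max_comments]))
    return recs


# Stand-in objects (fake data) that mimic the small part of PRAW used above.
fake_comments = SimpleNamespace(list=lambda: [
    SimpleNamespace(body="Example comment A", ups=50),
    SimpleNamespace(body="Example comment B", ups=2),
    SimpleNamespace(),  # mimics a placeholder without a body
])
fake_submission = SimpleNamespace(
    title="Example title", selftext="Example description", comments=fake_comments
)
fake_reddit = SimpleNamespace(
    subreddit=lambda name: SimpleNamespace(search=lambda q, limit: [fake_submission])
)

demo_recs = search(fake_reddit, "best pizza in rome")
demo_text = "\n===\n".join(str(rec) for rec in demo_recs)
```

With a real client, passing a `praw.Reddit` instance in place of `fake_reddit` should work the same way, since `search` only relies on the attributes shown.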

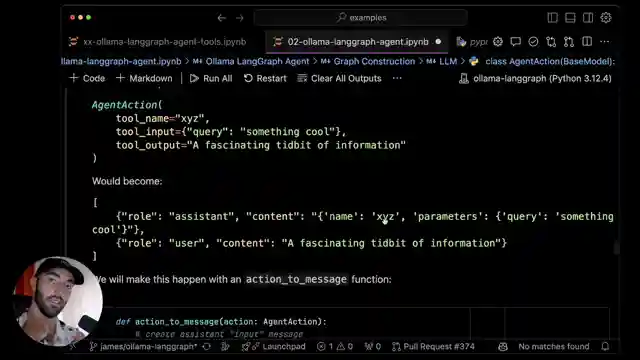

In a bold move, James Briggs champions calling Ollama directly rather than going through LangChain's functions, citing a preference for the former's straightforward approach. The agent's state is defined next, drawing on concepts shared in an earlier LangGraph video by the maestro himself. As the agent's architecture unfolds, we witness the interplay between the Oracle, the search tool, and the final answer tool, culminating in a tantalizing recommendation for the ultimate pizza experience in Rome. Brace yourselves, for this is not just a journey but a high-octane adventure into the heart of AI innovation.
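To make the Oracle, search, and final answer interplay concrete, here is a minimal plain-Python stand-in for the control flow. It is not LangGraph's API: the `AgentState` fields, the JSON decision shape (`name` plus `parameters`), the prompt text, and all helper names are assumptions for this sketch. The `ollama_oracle` function shows how a direct call through the `ollama` Python package could be wired in, while the stub oracle lets the loop run without a model.

```python
import json
from typing import TypedDict


class AgentState(TypedDict):
    input: str
    intermediate_steps: list  # (tool_name, tool_output) pairs


def parse_decision(raw: str, allowed: set) -> dict:
    """Validate the oracle's JSON decision: {"name": ..., "parameters": {...}}."""
    decision = json.loads(raw)
    if not isinstance(decision, dict) or decision.get("name") not in allowed:
        raise ValueError(f"Unknown or malformed tool decision: {raw!r}")
    decision.setdefault("parameters", {})
    return decision


def ollama_oracle(state: AgentState) -> str:
    """Ask a local model which tool to use; needs `pip install ollama` and a running server."""
    import ollama  # lazy import so the rest of the sketch runs without Ollama

    system = (
        "Reply with JSON of the form "
        '{"name": "search" or "final_answer", "parameters": {...}}.'
    )
    user = f"Query: {state['input']}\nSteps so far: {state['intermediate_steps']}"
    res = ollama.chat(
        model="llama3.1:8b",
        messages=[
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        format="json",
    )
    return res["message"]["content"]


def run_agent(query: str, oracle, tools: dict, max_steps: int = 5) -> dict:
    """Loop: oracle picks a tool, the tool runs, repeat until final_answer."""
    state: AgentState = {"input": query, "intermediate_steps": []}
    allowed = set(tools) | {"final_answer"}
    for _ in range(max_steps):
        decision = parse_decision(oracle(state), allowed)
        if decision["name"] == "final_answer":
            return decision["parameters"]
        output = tools[decision["name"]](**decision["parameters"])
        state["intermediate_steps"].append((decision["name"], output))
    raise RuntimeError("Agent did not reach final_answer within max_steps")


def stub_oracle(state: AgentState) -> str:
    """Deterministic stand-in for the LLM: search once, then answer."""
    if not state["intermediate_steps"]:
        return json.dumps({"name": "search", "parameters": {"query": state["input"]}})
    _, found = state["intermediate_steps"][-1]
    return json.dumps({"name": "final_answer", "parameters": {"answer": found}})


result = run_agent(
    "best pizza in rome",
    stub_oracle,
    {"search": lambda query: f"results for {query}"},
)
```

In a graph framework, the `for` loop would be replaced by nodes for the oracle and each tool, with a conditional edge from the oracle that picks the next node based on the decision's `name`.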

Image copyright YouTube

Watch Local LangGraph Agents with Llama 3.1 + Ollama on YouTube

Viewer Reactions for Local LangGraph Agents with Llama 3.1 + Ollama

Learning from LangGraph videos

Using a local LLM

Creating a RAG

Benchmark between LangGraph and semantic kernel

Issues with tools not working properly

Using LangChain decorator "@tool"

Understanding the role of nodes and edges in LangGraph

Considering using uv instead of Poetry or Conda

Exploring the idea of creating an app for RAG queries on saved YT videos

Building an independent system with own API and graphs

Related Articles

Exploring AI Agents and Tools in LangChain: A Deep Dive

This LangChain video explores AI agents and tools, which are crucial for extending what language models can do. It showcases tool creation, agent construction, and parallel tool execution, offering insights into the intricate world of AI development.

Mastering Conversational Memory in Chatbots with LangChain 0.3

This LangChain video explores conversational memory in chatbots, covering core components and memory types such as buffer and summary memory. It then transitions to the modern approach, `RunnableWithMessageHistory`, which integrates chat history into a chain for smoother conversational experiences.

Mastering AI Prompts: LangChain's Guide to Optimal Model Performance

This LangChain video explores the crucial role of prompts in AI models, guiding users through structuring effective prompts and invoking models for optimal performance. It also touches on few-shot prompting for smaller models, enhancing adaptability and efficiency.

Enhancing AI Observability with LangSmith

LangSmith, part of the LangChain ecosystem, offers observability for LLMs and agents. It simplifies setup, tracks activity, and provides valuable insights with minimal effort. Obtain an API key for access to tracing projects and detailed information. Enhance observability by making functions traceable and using LangSmith's filtering options.