AI Deployment Integrity: Ensuring Correct Behavior

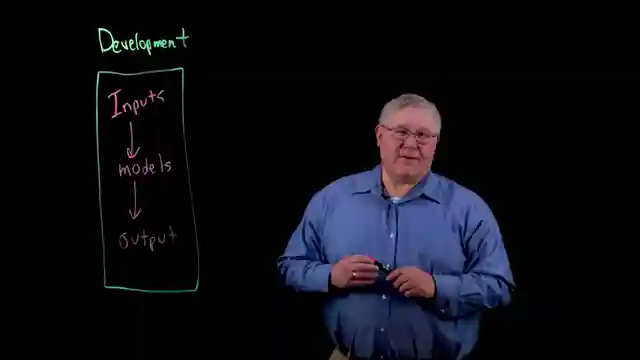

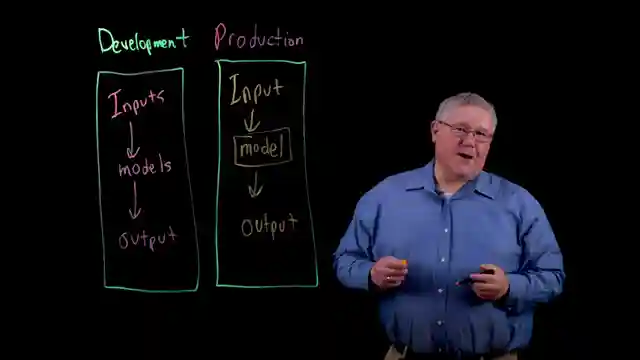

In this IBM Technology segment, the team takes on the critical task of keeping AI in check. Picture this: data scientists and AI engineers craft models in a development space, a sandbox where a model can be built and refined. The real challenge comes when those models are released into the wild, that is, into the production space. How do we ensure they don't go off the rails like a runaway train?

The team lays out three methods for keeping a deployed model on track. First, comparing the model's output to ground truth shows when its answers deviate from known-correct ones. Second, comparing the model's output in deployment with its output in development reveals when the two environments have drifted apart. Third, flags and filters screen the model's output and weed out unwanted content.
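The three methods above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the approach shown in the video: the function names, the use of a simple mean for the development-versus-deployment comparison, and the two regex patterns are all hypothetical choices made for the example.

```python
import re


def accuracy_vs_ground_truth(predictions, ground_truth):
    """Method 1: fraction of production predictions that match known-correct labels."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must have the same length")
    if not predictions:
        return 0.0
    matches = sum(p == t for p, t in zip(predictions, ground_truth))
    return matches / len(predictions)


def mean_shift(dev_scores, prod_scores):
    """Method 2: absolute gap between the mean development and mean production score.

    A plain mean is an assumption for illustration; real monitoring would
    likely compare full distributions rather than a single statistic.
    """
    dev_mean = sum(dev_scores) / len(dev_scores)
    prod_mean = sum(prod_scores) / len(prod_scores)
    return abs(dev_mean - prod_mean)


# Hypothetical patterns for content that should not appear in model output.
BLOCKED_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def flag_output(text):
    """Method 3: return the names of every blocked pattern found in the text."""
    return [name for name, pattern in BLOCKED_PATTERNS.items() if pattern.search(text)]


if __name__ == "__main__":
    print(accuracy_vs_ground_truth(["cat", "dog", "cat", "dog"], ["cat", "dog", "dog", "dog"]))
    print(mean_shift([0.8, 0.9, 0.7], [0.5, 0.6, 0.4]))
    print(flag_output("Contact me at jane@example.com"))
```

In practice each check would feed an alert: a drop in accuracy or a growing gap between development and production scores would prompt a review, and any flagged output could be blocked or routed to a human. The thresholds for those alerts depend on the application and are left out here.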

It's a high-stakes game of precision and vigilance, where even a small deviation in production can cause real problems. Armed with these three methods, the team stands ready to catch those deviations when they appear.

Image copyright YouTube


Watch Building Trustworthy AI: Avoid Model Drift and Unsafe Outputs on YouTube

Viewer Reactions for Building Trustworthy AI: Avoid Model Drift and Unsafe Outputs

Viewer enjoys drinking coffee while watching IBM AI topic videos

Comments thanking for the clear and helpful explanations

Positive feedback on the examples provided

Celebration emoji and heart

Confusion about a sudden change in language while using Grok-3 on X

Mention of using GPT-4o for assistance in communication
