Unveiling Vectara's Guardian Agents: Reducing AI Hallucinations in Critical Sectors

In this thrilling episode, the AI Uncovered team delves into the treacherous world of AI hallucinations, uncovering the catastrophic risks they pose in critical sectors like law, medicine, and enterprise. With examples of ChatGPT fabricating court cases and AI misrepresenting healthcare guidelines, the team paints a vivid picture of the havoc these hallucinations can wreak. But fear not, for Vectara emerges as the hero of our story, introducing its groundbreaking Guardian Agents to combat these digital demons in real time.

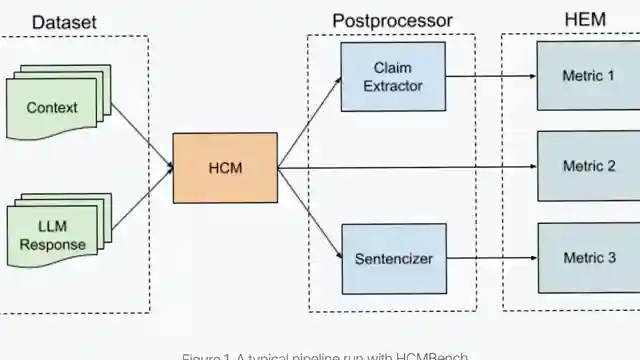

The Vectara Hallucination Corrector swoops in like a valiant knight, reducing hallucination rates to below 1% in smaller LLMs and providing detailed explanations for its corrections. This tool doesn't just detect errors; it surgically corrects them while preserving the essence of the original response. With the introduction of HCMBench, Vectara sets a new standard for evaluating hallucination correction systems, ensuring accuracy, minimal editing, factual alignment, and semantic preservation.
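To make the "minimal editing" criterion concrete, here is a small Python sketch that measures how much of a response a correction actually changed. It is only an illustration under stated assumptions: the function names `edit_ratio` and `changed_spans` are hypothetical, the word-level diff from Python's standard `difflib` module is a stand-in, and none of this is taken from Vectara's tools or benchmark.

```python
from difflib import SequenceMatcher


def edit_ratio(original: str, corrected: str) -> float:
    """Hypothetical metric: share of word-level content that changed (0.0 = identical)."""
    a, b = original.split(), corrected.split()
    # SequenceMatcher.ratio() is a similarity score in [0, 1]; invert it.
    return 1.0 - SequenceMatcher(None, a, b).ratio()


def changed_spans(original: str, corrected: str) -> list[tuple[str, str]]:
    """Return the (before, after) word spans that the correction touched."""
    a, b = original.split(), corrected.split()
    matcher = SequenceMatcher(None, a, b)
    return [
        (" ".join(a[i1:i2]), " ".join(b[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


# Made-up example: a correction that fixes one wrong year and nothing else.
original = "The guideline was published in 2019 by the agency."
corrected = "The guideline was published in 2021 by the agency."

print(changed_spans(original, corrected))  # [('2019', '2021')]
print(round(edit_ratio(original, corrected), 3))  # 0.111
```

A low ratio with a single changed span is what a "surgical" correction would look like under this toy measure; a correction that rewrote the whole sentence would score far higher even if it were factually right.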

As the industry hurtles towards agentic workflows, where AI takes on complex tasks across multiple stages, the Guardian Agents stand as a beacon of hope, safeguarding these processes from the perils of hallucinations. This dynamic shift from passive detection to active correction marks a new era in AI systems, emphasizing transparency, adaptability, and accountability. With hallucination rates plummeting and open-source benchmarks available, enterprises now have the tools they need to embrace AI with confidence, knowing that the systems surrounding it are smarter, more cautious, and more transparent than ever before.

Image copyright YouTube

Watch NEW Breakthrough: The End to AI Hallucinations Is Here on YouTube

Viewer Reactions for NEW Breakthrough: The End to AI Hallucinations Is Here

- Comparison between the maturity timeline of desktop computers and ChatGPT's progress
- Reduction of hallucinations in 8B models to 1%
- Mention of diffusion LLMs and reasoning diffusion LLMs
- Importance of writing unit and integration tests
- Discussion on the tricky subject of bias and trust in AI
- Conversation about avoiding hallucinations in chatbots like ChatGPT
- Tips to minimize hallucination in chatbot conversations
- Importance of being specific when facts matter
- Encouragement to ask for transparency in responses
- Use of web tools for fetching real sources

Related Articles

Unveiling Deceptive AI: Anthropic's Breakthrough in Ensuring Transparency

Anthropic's research uncovers hidden objectives in AI systems, emphasizing the importance of transparency and trust. Their innovative methods reveal deceptive AI behavior, paving the way for enhanced safety measures in the evolving landscape of artificial intelligence.

Unveiling Gemini 2.5 Pro: Google's Revolutionary AI Breakthrough

Discover Gemini 2.5 Pro, Google's groundbreaking AI release, which outperforms competitors on benchmarks, is free to use, and is integrated across Google products.

Revolutionizing AI: Abacus AI Deep Agent Pro Unleashed!

Abacus AI's Deep Agent Pro revolutionizes AI tools, offering persistent database support, custom domain deployment, and deep integrations at an affordable $20/month. Experience the future of AI innovation today.

Unveiling the Dangers: AI Regulation and Threats Across Various Fields

AI Uncovered explores the need for AI regulation and the dangers of autonomous weapons, quantum machine learning, deepfake technology, AI-driven cyberattacks, superintelligent AI, human-like robots, AI in bioweapons, AI-enhanced surveillance, and AI-generated misinformation.