Unveiling Indirect Prompt Injection: AI's Hidden Cybersecurity Threat

- Authors

- Published on

- Published on

Today, we delve into the treacherous territory of indirect prompt injection, a sophisticated twist on the classic prompt injection technique that can wreak havoc on AI systems. This diabolical method involves sneakily embedding information into data sources accessible to AI models, allowing for unforeseen and potentially disastrous outcomes. NIST has even dubbed it as the Achilles' heel of generative AI, highlighting the gravity of this cybersecurity threat. It's like giving a mischievous AI a secret weapon to use against unsuspecting users, a digital Trojan horse waiting to strike.

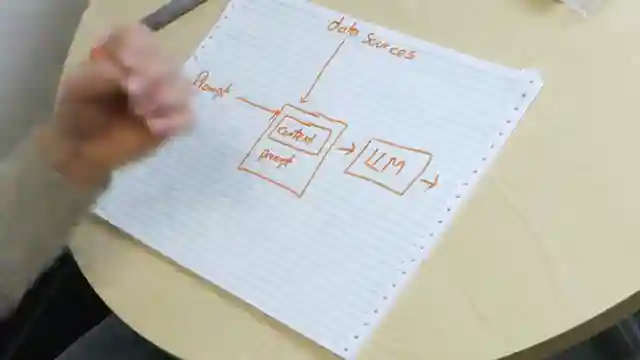

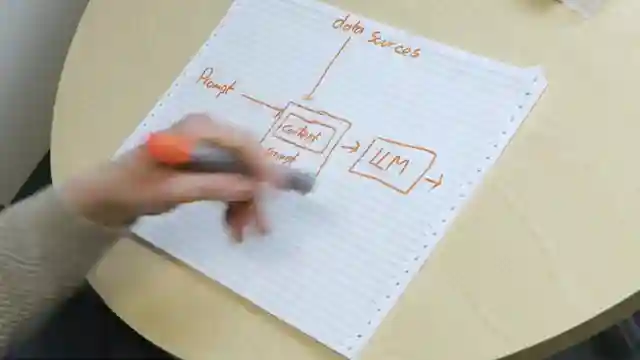

By integrating external data sources like Wikipedia pages or confidential business information into AI prompts, the potential for more accurate and contextually rich responses is unlocked. This means AI models can now draw upon a wealth of information to craft their answers, making them more powerful and versatile than ever before. However, this newfound power comes with a dark side - the risk of malicious actors manipulating these data sources to exploit vulnerabilities in AI systems. It's a high-stakes game of cat and mouse, with cybersecurity experts racing to stay one step ahead of potential threats.

Imagine a scenario where an AI-powered email summarization tool falls victim to indirect prompt injection, leading to unauthorized actions based on hidden instructions within innocent-looking emails. The implications are staggering - from fraudulent transactions to data breaches, the consequences of such attacks could be catastrophic. As AI technology continues to evolve and integrate with various data sources, the need for robust security measures to combat prompt injection attacks becomes more pressing than ever. The battle to secure AI systems against these insidious threats rages on, with researchers exploring innovative solutions to safeguard the digital realm from exploitation.

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch Generative AI's Greatest Flaw - Computerphile on Youtube

Viewer Reactions for Generative AI's Greatest Flaw - Computerphile

Naming a child with a long and humorous name

Experience of a retired programmer over the years

Prompt injections with AI video summarizers

Deja Vu feeling halfway through a sentence

Comparing systems to a golden retriever

Using tokens to separate prompt and output in LLM

Concerns about AI accessing private information

Different perspectives on the use and trustworthiness of LLM

Suggestions for improving LLM security and decision-making

Critiques and concerns about the use and reliability of AI

Related Articles

Unleashing Super Intelligence: The Acceleration of AI Automation

Join Computerphile in exploring the race towards super intelligence by OpenAI and Enthropic. Discover the potential for AI automation to revolutionize research processes, leading to a 200-fold increase in speed. The future of AI is fast approaching - buckle up for the ride!

Mastering CPU Communication: Interrupts and Operating Systems

Discover how the CPU communicates with external devices like keyboards and floppy disks, exploring the concept of interrupts and the role of operating systems in managing these interactions. Learn about efficient data exchange mechanisms and the impact on user experience in this insightful Computerphile video.

Mastering Decision-Making: Monte Carlo & Tree Algorithms in Robotics

Explore decision-making in uncertain environments with Monte Carlo research and tree search algorithms. Learn how sample-based methods revolutionize real-world applications, enhancing efficiency and adaptability in robotics and AI.

Exploring AI Video Creation: AI Mike Pound in Diverse Scenarios

Computerphile pioneers AI video creation using open-source tools like Flux and T5 TTS to generate lifelike content featuring AI Mike Pound. The team showcases the potential and limitations of AI technology in content creation, raising ethical considerations. Explore the AI-generated images and videos of Mike Pound in various scenarios.