Unveiling Figure's Helix: Advanced Humanoid Robot with Vision Language Model

In the thrilling world of robotics, Figure has unleashed the Helix - a humanoid robot that's not just your average tin can on wheels. No, this bad boy comes packed with a 7-billion-parameter vision-language model, which has some calling it the ChatGPT moment of robotics. Picture this: a robot that can understand your commands in plain English and execute tasks with the precision of a Swiss watch. It's like having your very own robotic butler, ready to fetch your morning coffee or slice your tomatoes with a flick of its mechanical wrist.

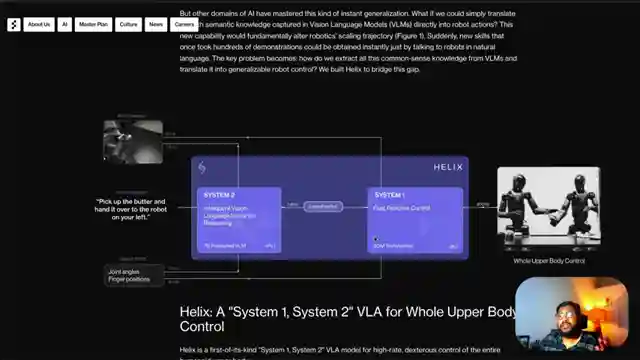

But what sets the Helix apart from the rest is its dual-system setup - a 7-billion-parameter vision-language model and an 80-million-parameter Transformer model working in harmony to process information at lightning speed. This isn't your run-of-the-mill robot that needs step-by-step instructions to make a cup of joe. No, the Helix can generalize actions across a myriad of objects and tasks, thanks to its cutting-edge technology. It's like teaching a dog new tricks, only this time, the dog is a high-tech humanoid with the brains to match its brawn.
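To make the dual-system idea a little more concrete, here is a minimal Python sketch of one way a big, slow model and a small, fast model could share a control loop. Everything in it is an assumption for illustration: the names `slow_model`, `fast_policy`, `Latent`, and `run_control_loop`, the update ratio, and the toy "nudge the joints toward zero" behaviour are all hypothetical, not Figure's actual code or API. The only point is the shape of the loop: the large model refreshes its understanding now and then, while the small model acts on every tick using the latest result.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Latent:
    """Hypothetical summary the slow model hands to the fast model."""
    instruction: str
    step: int  # control tick at which this latent was produced


def slow_model(instruction: str, image: Sequence[float], step: int) -> Latent:
    """Stand-in for the large vision-language model (placeholder logic only)."""
    return Latent(instruction=instruction, step=step)


def fast_policy(latent: Latent, joint_state: Sequence[float]) -> List[float]:
    """Stand-in for the small Transformer policy (placeholder logic only)."""
    # Toy behaviour: nudge every joint toward zero.
    # A real policy would be a learned network conditioned on the latent.
    return [-0.1 * q for q in joint_state]


def run_control_loop(
    instruction: str, ticks: int, slow_every: int
) -> Tuple[List[int], List[float]]:
    """Run the fast policy every tick and the slow model every `slow_every` ticks."""
    joint_state = [1.0, -0.5]  # made-up starting joint positions
    latent: Optional[Latent] = None
    latent_steps: List[int] = []
    for t in range(ticks):
        if t % slow_every == 0:
            # Infrequent, expensive update: re-read the scene and the command.
            latent = slow_model(instruction, image=[0.0], step=t)
        # Frequent, cheap update: act on the most recent latent.
        action = fast_policy(latent, joint_state)
        joint_state = [q + a for q, a in zip(joint_state, action)]
        latent_steps.append(latent.step)
    return latent_steps, joint_state


if __name__ == "__main__":
    steps, state = run_control_loop("pick up the cup", ticks=6, slow_every=3)
    print(steps)
    print(state)
```

With `slow_every=3`, the fast policy reuses each latent for three ticks before the slow model refreshes it. In a real system the two models would more likely run in parallel rather than in one sequential loop, so treat this purely as a picture of the division of labour.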

As we delve deeper into the inner workings of the Helix, we uncover a world where deep neural networks reign supreme. Figure's decision to part ways with OpenAI speaks volumes about their commitment to using open-source models to drive their robotics projects forward. This isn't just about building a robot; it's about revolutionizing the way we interact with technology. The Helix isn't just a robot; it's a glimpse into the future of robotics, where artificial intelligence and human ingenuity collide in a symphony of metal and code.

Image copyright YouTube

Watch "This Actual HUMANOID is run by JUST a 7B AI Model!!!" on YouTube

Viewer Reactions for "This Actual HUMANOID is run by JUST a 7B AI Model!!!"

- Mention of the 7B + 80M parameter models

- Discussion of vision-language models and vision-language-action (VLA) models

- Impressed reactions to the technology

- Doubt expressed about the movements in the video

- Comparison to a ChatGPT moment for robotics
