Unlocking DeepSeek R1: Running Advanced Chinese AI Locally

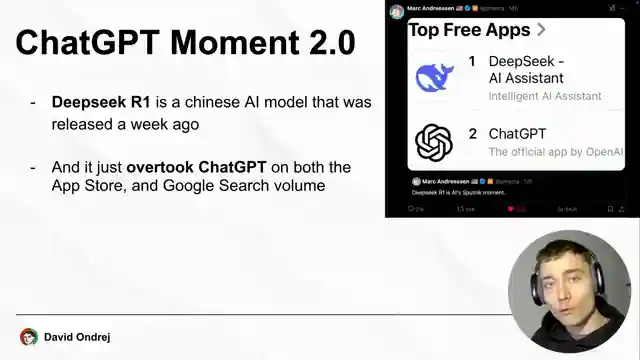

In this episode, David Ondrej dives headfirst into the groundbreaking DeepSeek R1 model from China. Having overtaken the once-dominant ChatGPT in popularity, DeepSeek R1 is said to be 27 times cheaper than OpenAI's o1 and has a staggering 671 billion parameters. Its release sent shockwaves through the tech industry, wiping roughly $600 billion off the market value of American giants like Nvidia and AMD. DeepSeek R1 also comes in smaller distilled versions that can run on a variety of computer setups, making its technology accessible to far more people.
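To make the gap between the full model and the distilled versions concrete, here is a rough back-of-the-envelope sketch. It estimates the memory needed for the weights alone as parameter count times bits per weight, and ignores everything else a running model needs (context cache, runtime overhead). The 671B figure comes from the text above; the smaller parameter counts and the 4-bit and 16-bit precisions are illustrative assumptions, not numbers from the video.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the model weights alone, in decimal gigabytes."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


# 671B is the full model size cited in the article.
# The smaller sizes are assumed, illustrative distilled-model sizes.
sizes = [("671B", 671e9), ("70B", 70e9), ("8B", 8e9), ("1.5B", 1.5e9)]

for label, params in sizes:
    four_bit = weight_memory_gb(params, 4)
    sixteen_bit = weight_memory_gb(params, 16)
    print(f"{label}: ~{four_bit:.1f} GB at 4-bit, ~{sixteen_bit:.1f} GB at 16-bit")
```

Under these assumptions, the full model needs hundreds of gigabytes even at 4-bit precision, while a model in the single-digit billions fits in a few gigabytes, which is why only the distilled versions are practical on ordinary computers.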

As DeepSeek suffers a global outage brought on by its unprecedented popularity, the spotlight falls on the model's reasoning capabilities. Unlike traditional language models, reasoning models such as DeepSeek R1 work through a problem before answering, which tends to produce more insightful responses. Sam Altman himself acknowledges DeepSeek's impressive results and the healthy competition it brings to the AI arena. But the road ahead is not without its challenges, as OpenAI grapples with adapting to the success of Chinese AI models like DeepSeek.

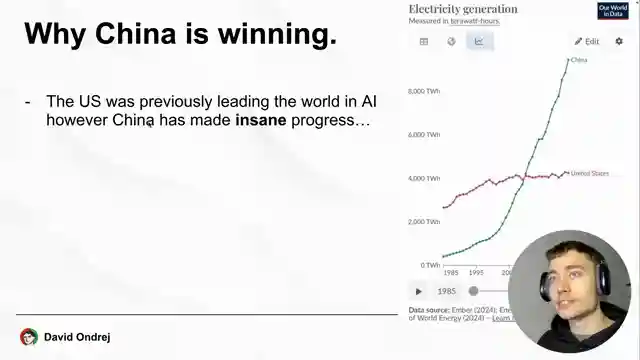

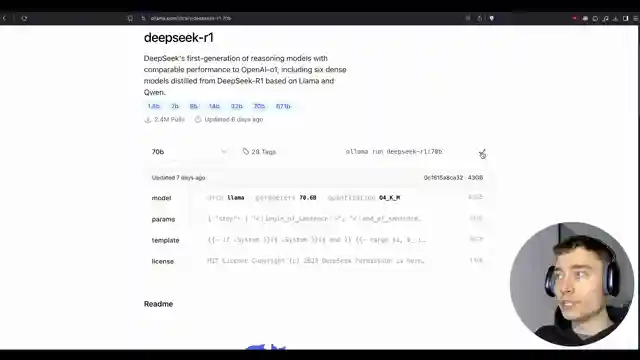

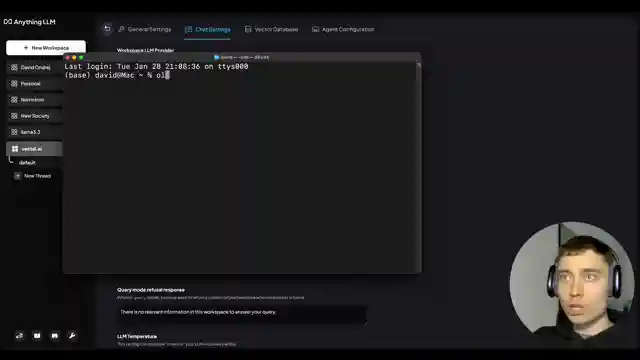

China's rise in the AI race is no coincidence; it is fueled by abundant energy resources and top-tier talent. Models like DeepSeek R1 rely on reinforcement learning, a powerful training method that sets them apart in the field. Following the step-by-step guide presented in the video, users can run DeepSeek R1 on their own computers using tools like Vectal and Ollama. By embracing open-source models like DeepSeek, users gain unprecedented insight into the inner workings of AI decision-making, ushering in a new era of adaptability and learning in the tech landscape.
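As a minimal sketch of what running the model locally can look like in code, the example below prepares a request for a locally running Ollama server and separates the model's visible reasoning from its final answer. It is not the exact procedure from the video. It assumes Ollama is installed and serving on its default port, that a distilled model has already been pulled (the tag `deepseek-r1:8b` is an assumed example), and that the reply embeds its reasoning between `<think>` tags; some tool versions may return the reasoning separately, in which case the parser simply returns the text unchanged. The helper names are hypothetical.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_TAG = "deepseek-r1:8b"  # assumed tag; use whichever size you pulled


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for the local server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def split_reasoning(text: str) -> tuple[str, str]:
    """Split a reply into (reasoning, answer) using the first <think> block."""
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        return "", text.strip()  # no visible reasoning in this reply
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


def ask_local_model(prompt: str) -> tuple[str, str]:
    """Send the prompt to the local server; requires Ollama to be running."""
    with urllib.request.urlopen(build_request(prompt)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return split_reasoning(body["response"])


# Offline demonstration on a canned reply, so no server is needed here.
sample = "<think>The user asks for 2 + 2. That is 4.</think>\nThe answer is 4."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

With the server running, calling `ask_local_model("Why is the sky blue?")` would send the prompt to the model on your own machine, so the prompt and the reply never leave it.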

Image copyright YouTube


Watch "How to run DeepSeek on your computer (100% private)" on YouTube

Viewer Reactions for "How to run DeepSeek on your computer (100% private)"

- Running a model smaller than the 671B-parameter version is not really running R1
- The different model sizes are built on different base models
- DeepSeek lets you run your own AI model on your own hardware
- Interest in building a modular system for AI
- Reinforcement learning with AI models
- Comparison between OpenAI and DeepSeek
- Concerns about privacy and data security
- Questions about feeding large amounts of data to DeepSeek
- Interest in setting up text generation tasks with DeepSeek
- Concerns about the speed and complexity of the explanations
