Perplexity Unveils Uncensored DeepSeek R1 Model R1 1776: A Game-Changer in AI Transparency

Perplexity has released R1 1776, an uncensored version of DeepSeek R1 that breaks from the norm. Unlike many uncensored variants, this model doesn't rely on abliteration to strip out refusals. Instead, it is post-trained, which keeps quality top-notch without compromise. The team identified about 300 sensitive topics and used them to build a multilingual censorship classifier. That classifier then helped assemble a dataset of 40,000 prompts for training.
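The data-selection step described above can be sketched roughly as follows. This is a minimal illustration, not Perplexity's pipeline: the topic names, keywords, `keyword_score`, and the `threshold` value are all hypothetical, and a simple keyword match stands in for the trained multilingual classifier, which the article does not describe in detail.

```python
from typing import Callable, Iterable

# Hypothetical placeholder topics and keywords. The article does not list
# the real ~300 topics, so these are stand-ins for illustration only.
TOPIC_KEYWORDS: dict[str, tuple[str, ...]] = {
    "topic_a": ("alpha", "apple"),
    "topic_b": ("bravo",),
}


def keyword_score(prompt: str) -> float:
    """Toy stand-in for a trained classifier.

    Returns the fraction of topics with at least one keyword in the prompt.
    """
    text = prompt.lower()
    hits = sum(
        any(keyword in text for keyword in keywords)
        for keywords in TOPIC_KEYWORDS.values()
    )
    return hits / len(TOPIC_KEYWORDS)


def select_prompts(
    prompts: Iterable[str],
    score: Callable[[str], float] = keyword_score,
    threshold: float = 0.5,   # assumed cutoff, not a value from the article
    limit: int = 40_000,      # dataset size mentioned in the article
) -> list[str]:
    """Keep prompts scoring at or above the threshold, up to `limit` items."""
    selected: list[str] = []
    for prompt in prompts:
        if score(prompt) >= threshold:
            selected.append(prompt)
            if len(selected) >= limit:
                break
    return selected


if __name__ == "__main__":
    candidates = [
        "Tell me about alpha",
        "What is the weather?",
        "Bravo and apple pie",
    ]
    print(select_prompts(candidates))
```

Passing the scoring function in as a parameter means the keyword matcher could be swapped for a real classifier without changing the selection logic.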

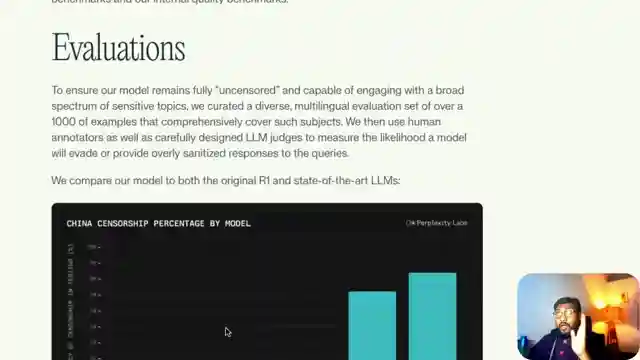

But the real magic happens in post-training, where the team used Nvidia's NeMo 2.0 framework to fine-tune the model. The result is a model that shows the least China-related censorship among comparable models. And let's not forget the DeepSeek team's bold move of sharing its model weights openly, paving the way for others to follow suit. R1 1776 doesn't shy away from tough questions, offering factual responses where its predecessor faltered.

The name references 1776, the year of American independence, casting the model as a revolutionary in the world of uncensored AI. The future looks bright too, with hints of smaller, more accessible versions on the horizon. So buckle up, folks: this uncensored DeepSeek R1 is not just a game-changer, it's a freedom fighter in the digital realm, all thanks to the team at Perplexity.

Image copyright YouTube

Watch "Finally! The UNCENSORED Deepseek R1 Open Source!" on YouTube

Viewer Reactions for "Finally! The UNCENSORED Deepseek R1 Open Source!"

Running models locally with specific hardware for better performance

Mention of the historical significance of 1776

Discussion on the uncensored nature of the model and comparisons with other models

Costs of running models on different cloud platforms

Mention of specific models like DeepSeek v3 and R1

Comments on the biases removed from the model

Humorous comments about Gemini's responses

Criticisms of the model's censorship and biases

Mention of topics not covered or limitations of the model

Criticisms of the model being filled with Western propaganda
