Optimizing Neural Networks: LoRA Method for Efficient Model Fine-Tuning

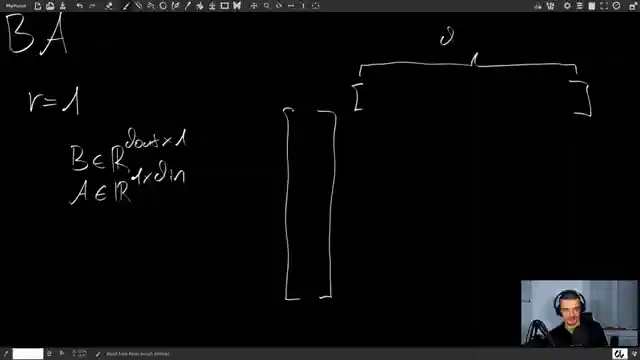

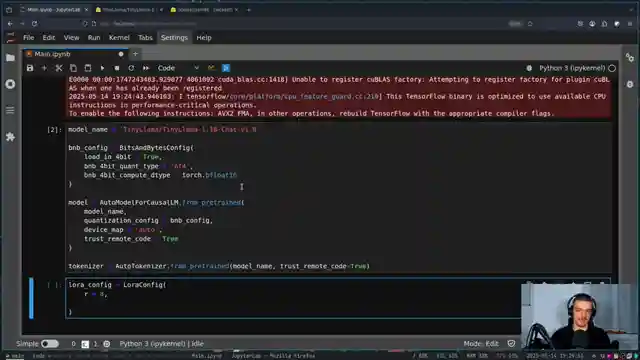

In this video, NeuralNine introduces LoRA, a technique for fine-tuning large language models. LoRA, short for low-rank adaptation, homes in on specific weight matrices rather than updating the entire model. Instead of learning a full difference matrix (the change applied to a pretrained weight matrix during fine-tuning), LoRA factors it into the product of two much smaller matrices, B and A, and trains only those. The method rests on the assumption that this difference has low intrinsic rank, so the compact factors are enough to represent it while taking far less space, compute, and training time. The video then implements LoRA in Python without requiring extensive mathematical background, and notes that the method works even with limited GPU resources, for example in a Google Colab notebook.
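To make the space saving concrete, here is a minimal sketch of the arithmetic. It assumes a single weight matrix of shape d × k and a LoRA rank r, so that B is d × r and A is r × k. The function name `lora_param_counts` and the sizes used (4096 × 4096, rank 8) are illustrative assumptions, not values from the video.

```python
def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full, lora) trainable-parameter counts for one d x k weight matrix."""
    full = d * k            # training the whole difference matrix
    lora = d * r + r * k    # training only B (d x r) and A (r x k)
    return full, lora


# Illustrative sizes only (assumed, not from the video).
full, lora = lora_param_counts(d=4096, k=4096, r=8)
print(f"full update: {full:,} parameters")
print(f"LoRA update: {lora:,} parameters")
print(f"reduction:   {full / lora:.0f}x")
```

The saving grows as r shrinks relative to d and k, which is why the low-rank assumption matters: the smaller the rank that still represents the update, the fewer parameters need training.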

The video also covers the role of weights and biases in neural networks, and of weight matrices in particular, since these are what training adjusts. LoRA targets the adjustments to selected weight matrices rather than fine-tuning every parameter in the model. Decomposing those adjustments into small matrices keeps training light, and the Python demonstration shows that the approach is usable without a deep grasp of the underlying mathematics. The video also highlights that LoRA can adapt a model to different tasks with little effort.
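The idea of leaving the weight matrix untouched and training only the small factors can be sketched in a few lines of NumPy. This is not the code from the video: the sizes, the zero initialization of B, the squared-error loss, and the helper names `forward` and `sgd_step` are all assumptions chosen to keep the example small.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, r = 6, 4, 2                     # illustrative sizes (assumed)

W = rng.normal(size=(d_out, d_in))           # pretrained weights, kept frozen
B = np.zeros((d_out, r))                     # trainable; zero init so the update starts at zero
A = rng.normal(scale=0.01, size=(r, d_in))   # trainable


def forward(x, W, B, A):
    """Compute x @ (W + B @ A).T without building the full update matrix."""
    return x @ W.T + (x @ A.T) @ B.T


def sgd_step(x, target, W, B, A, lr=0.1):
    """One gradient step on a batch-averaged squared-error loss, updating only B and A."""
    h = x @ A.T                                      # (batch, r)
    err = (forward(x, W, B, A) - target) / len(x)    # gradient of the loss w.r.t. the output
    grad_B = err.T @ h                               # (d_out, r)
    grad_A = (err @ B).T @ x                         # (r, d_in)
    return B - lr * grad_B, A - lr * grad_A


x = rng.normal(size=(3, d_in))
target = rng.normal(size=(3, d_out))

W_before = W.copy()
B, A = sgd_step(x, target, W, B, A)

# After training, the low-rank update can be folded back into a single matrix.
W_merged = W + B @ A
```

Here `W` is never modified by the training step, and `W_merged` produces the same outputs as the two-path forward pass, so the adapted model can be stored either as the small factors or as one merged matrix.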

Taken together, the video presents LoRA as an efficient, resource-friendly way to fine-tune models: focus on key matrices, rely on the low-intrinsic-rank assumption, and train only the small factors. The Python demonstration makes the method accessible to a wide range of users, and because it remains effective with limited GPU resources, it can be used across a variety of platforms.

Image copyright YouTube

Watch Fine-Tuning Local Models with LoRA in Python (Theory & Code) on YouTube

Viewer Reactions for Fine-Tuning Local Models with LoRA in Python (Theory & Code)

- Positive feedback on tutorial format and content

- Request for video explaining parameters and their values

- Question about training on existing LoRA adapters with new data

- Request for intuition on model parameters and reducing model parameters

- Challenge suggestion for "Für Elise"

Related Articles

Building Stock Prediction Tool: PyTorch, Fast API, React & Warp Tutorial

NeuralNine constructs a stock prediction tool using PyTorch, Fast API, React, and Warp. The tutorial showcases training the model, building the backend, and deploying the application with Docker. Witness the power of AI in predicting stock prices with this comprehensive guide.

Exploring Arch Linux: Customization, Updates, and Troubleshooting Tips

NeuralNine explores the switch to Arch Linux for cutting-edge updates and customization, detailing the manual setup process, troubleshooting tips, and the benefits of the Arch User Repository.

Master Application Monitoring: Prometheus & Graphfana Tutorial

Learn to monitor applications professionally using Prometheus and Graphfana in Python with NeuralNine. This tutorial guides you through setting up a Flask app, tracking metrics, handling exceptions, and visualizing data. Dive into the world of application monitoring with this comprehensive guide.

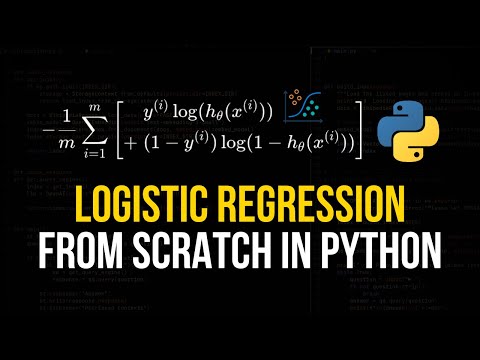

Mastering Logistic Regression: Python Implementation for Precise Class Predictions

NeuralNine explores logistic regression, a classification algorithm revealing probabilities for class indices. From parameters to sigmoid functions, dive into the mathematical depths for accurate predictions in Python.