Optimizing Looker Performance: Trim LookML for Faster System Efficiency

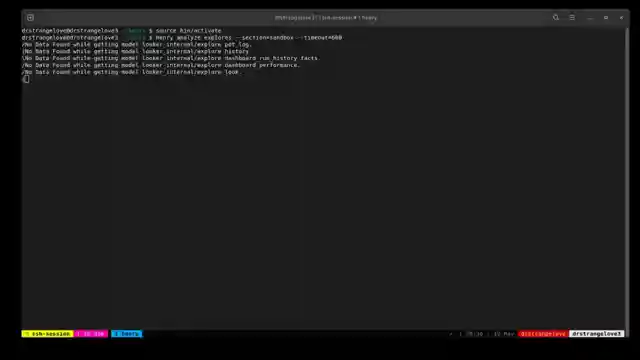

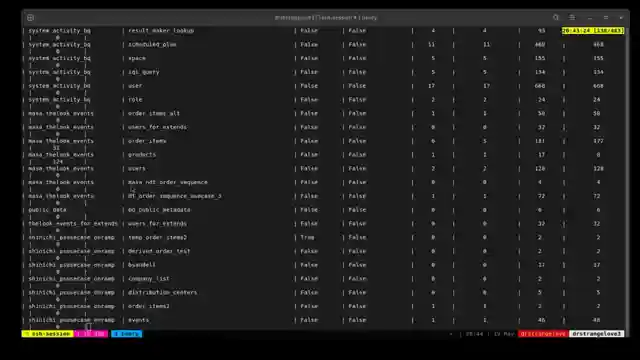

In this thrilling episode, we join Mike DeAngelo, a Google developer, as he delves into Looker performance optimization alongside his trusty sidekick Henry, a command-line tool for spotting model bloat in a Looker instance. With the intensity of a high-speed race, Mike walks through activating a Python virtual environment and running Henry's analyze command against the instance's Explores. The output reports the number of joins, fields, and queries for each Explore, shedding light on inefficiencies lurking within the system.
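To make the per-Explore counts concrete, here is a minimal Python sketch of how such a report could be turned into a shortlist of trimming candidates. It is not Henry's implementation: the `ExploreStats` shape, the `trimming_candidates` helper, the `legacy_events` Explore, the 50 fields-per-query threshold, and every number below are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExploreStats:
    """Per-Explore counts (hypothetical shape, not Henry's output format)."""
    name: str
    join_count: int
    field_count: int
    query_count: int


def trimming_candidates(stats, min_fields_per_query=50):
    """Return Explores that expose many fields relative to how often they are queried.

    Explores with no queries at all sort first; the rest are ordered by
    fields-per-query, highest first. The threshold is an arbitrary assumption.
    """
    def fields_per_query(s):
        if s.query_count == 0:
            return float("inf")
        return s.field_count / s.query_count

    flagged = [s for s in stats if fields_per_query(s) >= min_fields_per_query]
    return sorted(flagged, key=fields_per_query, reverse=True)


# Made-up numbers for illustration only; they are not taken from the video.
sample = [
    ExploreStats("order_items", join_count=6, field_count=400, query_count=200),
    ExploreStats("products", join_count=3, field_count=150, query_count=2),
    ExploreStats("legacy_events", join_count=1, field_count=40, query_count=0),
]

for s in trimming_candidates(sample):
    print(s.name, s.join_count, s.field_count, s.query_count)
```

Ranking by fields per query is only one possible heuristic; a team might just as reasonably sort by raw query count or by join count.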

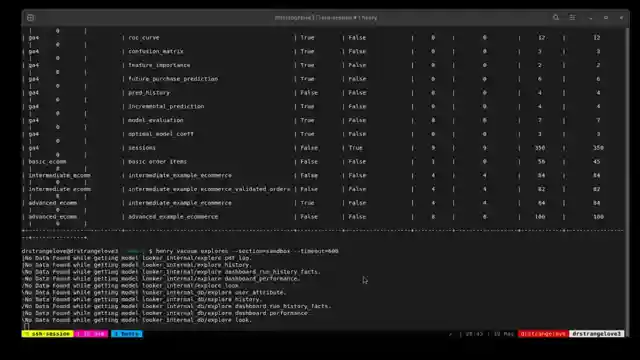

With the precision of a seasoned race car driver, Mike uncovers unused joins and fields in Explores like products and order_items, giving viewers a front-row seat to the action-packed world of LookML trimming. The vacuuming process uncovers a staggering 8,000 unused data rows, a goldmine of inefficiencies waiting to be eliminated. Through strategic field removal and model optimization, Mike and Henry transform the bloated LookML into a lean, mean machine, ready to tackle any data challenge with speed and agility.
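The idea behind finding unused joins and fields can be sketched in a few lines of Python: compare the fields an Explore exposes with the fields that actually show up in query history. This is an illustrative sketch, not how Henry does it. The `find_unused` helper, the `view.field` naming, and the sample field lists are all assumptions.

```python
def find_unused(explore_fields, query_fields, base_view):
    """Split an Explore's fields into unused fields and unused joins.

    explore_fields: iterable of "view.field" names exposed by the Explore.
    query_fields:   iterable of "view.field" names seen in query history.
    base_view:      name of the Explore's base view, which is not a join.
    """
    exposed = set(explore_fields)
    used = set(query_fields) & exposed

    # Fields that are exposed but never appear in any query.
    unused_fields = sorted(exposed - used)

    # A joined view counts as unused when none of its fields were queried.
    exposed_views = {name.split(".", 1)[0] for name in exposed}
    used_views = {name.split(".", 1)[0] for name in used}
    unused_joins = sorted(exposed_views - used_views - {base_view})

    return unused_fields, unused_joins


# Hypothetical field lists for an order_items Explore.
exposed = [
    "order_items.id",
    "order_items.sale_price",
    "products.brand",
    "products.category",
    "users.age",
]
queried = ["order_items.sale_price", "products.brand"]

unused_fields, unused_joins = find_unused(exposed, queried, base_view="order_items")
print(unused_fields)
print(unused_joins)
```

A sketch like this only looks at fields selected in queries. A field that is never queried directly can still be referenced by another field's SQL or by a join condition, so any list of "unused" fields deserves a review before anything is deleted from the LookML.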

As the dust settles, Mike leaves viewers with a crucial lesson in system optimization: from fixing data source configurations to identifying and removing redundant fields, every step counts towards a faster, more responsive Looker instance. By following Mike and Henry's lead, viewers are equipped with the tools to streamline their own systems, ensuring peak performance and efficiency. With their expert guidance, Looker optimization becomes not just a task, but a thrilling adventure waiting to be embarked upon.

Image copyright YouTube


Watch Looker Performance Optimization - Episode 5 on YouTube

