Optimizing Generative AI: Vertex AI Evaluation Toolkit Guide

- Authors

- Published on

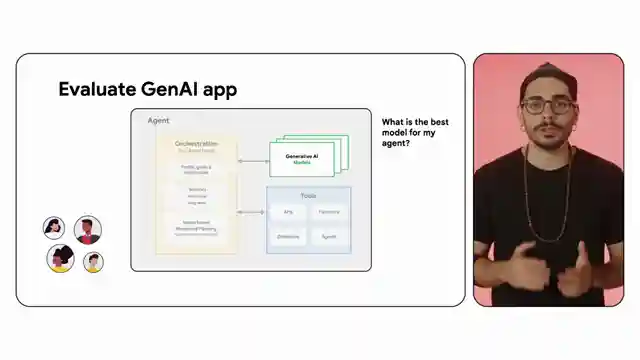

Today, the Google Cloud Tech team looks at how to evaluate generative AI applications for reliability. They stress three areas: choosing the right model, checking how the application uses tools, and analyzing data from real-world interactions. They then introduce the Vertex AI GenAI Evaluation toolkit. The toolkit offers prebuilt and customizable metrics and integrates with Vertex AI Experiments. It also reduces an evaluation to three steps: prepare an evaluation dataset, define the metrics, and create and run an evaluation task.

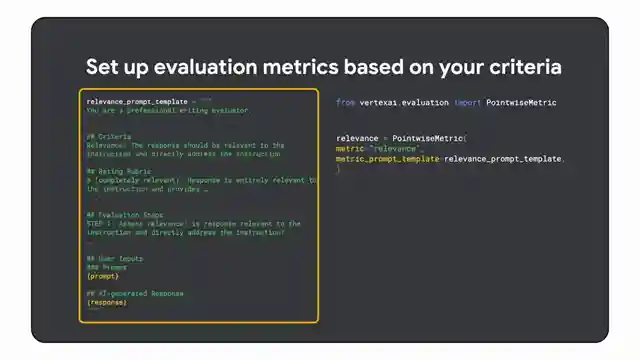

They then explain why the evaluation dataset needs careful preparation. It should contain diverse examples, together with model outputs, correct (reference) answers, and expected tool calls, so that it gives a representative picture of the application's performance. Defining the evaluation metrics is the next step, and the team gives a quick example of a custom relevance metric for evaluating a single model. Custom metrics can be written from scratch or built from prebuilt templates, so each part of the evaluation can be tuned to the application.
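
To make the dataset and metric steps concrete, here is a minimal, stdlib-only sketch. It does not call the Vertex AI SDK, and the rows are invented.

- The `prompt`/`response`/`reference` column names follow the naming used by the Vertex AI evaluation SDK.
- The tool-call rows use an assumed JSON shape with `content` and `tool_calls` keys.
- `CustomPointwiseMetric` is a hypothetical class. In the real SDK, a custom single-model metric of this kind is built with `PointwiseMetric` and `PointwiseMetricPromptTemplate`, which take `criteria` and `rating_rubric` dictionaries.

The sketch only shows how criteria and a rubric turn into the instruction text that a judge model would score.

```python
import json
from dataclasses import dataclass

# Made-up evaluation rows. The "prompt" / "response" / "reference" column
# names follow the naming used by the Vertex AI evaluation SDK.
eval_rows = [
    {
        "prompt": "What is the capital of France?",
        "response": "The capital of France is Paris.",
        "reference": "Paris",
    },
    {
        "prompt": "What's the weather in Tokyo right now?",
        # Assumed format for tool-use rows: a JSON string holding the text
        # "content" plus a list of "tool_calls" with names and arguments.
        "response": json.dumps({"content": "", "tool_calls": [
            {"name": "get_weather", "arguments": {"city": "Tokyo"}}]}),
        "reference": json.dumps({"content": "", "tool_calls": [
            {"name": "get_weather", "arguments": {"city": "Tokyo"}}]}),
    },
]


@dataclass
class CustomPointwiseMetric:
    """Hypothetical local stand-in for an LLM-judged, single-model metric."""

    name: str
    criteria: dict[str, str]
    rating_rubric: dict[str, str]

    def render_judge_prompt(self, row: dict) -> str:
        """Build the instruction text a judge (autorater) model would receive."""
        criteria = "\n".join(f"- {k}: {v}" for k, v in self.criteria.items())
        rubric = "\n".join(f"{score}: {text}" for score, text in self.rating_rubric.items())
        return (
            f"Evaluate the response against these criteria:\n{criteria}\n\n"
            f"Rating rubric:\n{rubric}\n\n"
            f"User prompt: {row['prompt']}\n"
            f"Response: {row['response']}\n"
            "Reply with the score only."
        )


relevance = CustomPointwiseMetric(
    name="custom_relevance",
    criteria={"relevance": "The response directly addresses the user's prompt."},
    rating_rubric={"1": "The response is relevant.", "0": "The response is off-topic."},
)

print(relevance.render_judge_prompt(eval_rows[0]))
```

Keeping the criteria and rubric as plain data, rather than hard-coding them into a prompt string, is what makes a template reusable across datasets. This is the same idea behind starting from a prebuilt template and adjusting it.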

Next, they walk through creating an evaluation task and running it on Vertex AI with the Python SDK. The evaluation task takes the dataset and the chosen metrics, is linked to an experiment, and is then run, a process simple enough for newcomers to follow. Finally, the team shows how Vertex AI Experiments visualizes and tracks evaluation results. This makes it possible to analyze a single run in depth, compare different runs, and understand how a generative AI application performs. With Vertex AI Generative AI Evaluation, the team says, users get easy access to metrics and can create and share custom reports that drive continuous improvement of their AI applications.
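
In the Vertex AI SDK for Python, the evaluation-task step is handled by `EvalTask` from `vertexai.evaluation`. It is constructed with a dataset, a list of metrics, and an experiment name. Its `evaluate()` method returns a result exposing `summary_metrics` and a per-row `metrics_table`.

The sketch below does not call that SDK. It is a local, stdlib-only illustration of what such a task does:

1. Score every row with every metric.
2. Average the scores per metric.
3. Record each run's summary under a run name so runs can be compared.

Some details are assumptions or stand-ins:

- `run_eval_task`, `exact_match`, and `tool_name_match` are hypothetical local functions.
- The `metric/mean` key pattern is an assumption modeled on the SDK's summary output.
- The `experiment` dictionary merely stands in for Vertex AI Experiments.

```python
import json
import statistics
from typing import Callable

# A metric maps one dataset row to a numeric score (hypothetical interface).
Metric = Callable[[dict], float]


def exact_match(row: dict) -> float:
    """1.0 if the response equals the reference, ignoring surrounding whitespace."""
    return float(row["response"].strip() == row["reference"].strip())


def tool_name_match(row: dict) -> float:
    """1.0 if the called tool names match the reference tool names, in order."""

    def names(payload: str) -> list[str]:
        return [call["name"] for call in json.loads(payload).get("tool_calls", [])]

    return float(names(row["response"]) == names(row["reference"]))


def run_eval_task(rows: list[dict], metrics: dict[str, Metric]) -> tuple[list[dict], dict[str, float]]:
    """Score every row with every metric; return a per-row table and per-metric means."""
    table = [{**row, **{name: fn(row) for name, fn in metrics.items()}} for row in rows]
    summary = {f"{name}/mean": statistics.mean(r[name] for r in table) for name in metrics}
    return table, summary


# Stand-in for an experiment: run name -> summary metrics, so runs can be compared.
experiment: dict[str, dict[str, float]] = {}
candidate_outputs = {"run-a": ["Paris", "Lyon"], "run-b": ["Paris", " Paris "]}
for run_name, responses in candidate_outputs.items():
    rows = [{"response": r, "reference": "Paris"} for r in responses]
    _, experiment[run_name] = run_eval_task(rows, {"exact_match": exact_match})

for run_name, summary in experiment.items():
    print(run_name, summary)
```

Here `run-a` averages 0.5 and `run-b` averages 1.0 on `exact_match`. Placing run summaries side by side like this is the kind of comparison the video shows Vertex AI Experiments doing in its UI.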

Image copyright YouTube

Watch "How to evaluate your Gen AI models with Vertex AI" on YouTube

Viewer Reactions for "How to evaluate your Gen AI models with Vertex AI"

Viewers interested in more AI explainer videos

Positive reactions with emojis like 🌺❤️🌺👍🇹🇭🇹🇭

Related Articles

Mastering Real-World Cloud Run Services with FastAPI and Muslim

Discover how Google developer expert Muslim builds real-world Cloud Run services using FastAPI, uvicorn, and Cloud Build. Learn about processing football statistics, deployment methods, and the power of FastAPI for seamless API building on Cloud Run. Elevate your cloud computing game today!

The Agent Factory: Advanced AI Frameworks and Domain-Specific Agents

Explore advanced AI frameworks like LangGraph and CrewAI on Google Cloud Tech's "The Agent Factory" podcast. Learn about domain-specific agents, coding assistants, and the latest updates in AI development. The ADK v1 release brings enhanced features for Java developers.

Simplify AI Integration: Building Tech Support App with Large Language Model

Google Cloud Tech simplifies AI integration by treating it as an API. They demonstrate building a tech support app using a large language model in AI Studio, showcasing code deployment with Google Cloud and Firebase Hosting. The app functions like a traditional web app, highlighting the ease of leveraging AI to enhance user experiences.

Nvidia's Small Language Models and AI Tools: Optimizing On-Device Applications

Explore Nvidia's small language models and AI tools for on-device applications. Learn about quantization, NeMo Guardrails, and TensorRT for optimized AI development. Exciting advancements await in the world of AI with Nvidia's latest hardware and open-source frameworks.