Optimize ML Workloads: Google Cloud's New Diagnostics Library & XProf Tool

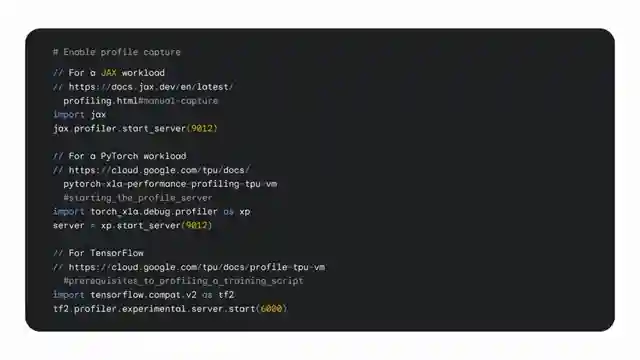

In this episode, the Google Cloud Tech team introduces a new cloud diagnostics profiling library and an updated XProf tool for improving ML performance on Google Cloud. Presented by Reesh and Hira, the tools give ML engineers a streamlined way to optimize workloads on XLA-based frameworks such as JAX, PyTorch (via PyTorch/XLA), and TensorFlow. With the Cloud Diagnostics XProf library, users can set up a self-hosted TensorBoard VM instance and capture profiles either programmatically or on demand.
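The summary does not show how a profile is captured programmatically, so the following is only a sketch of what that can look like in JAX. It uses JAX's built-in `jax.profiler` API, not the Cloud Diagnostics library's own interface (which isn't described here). The `train_step` function, array sizes, and step count are hypothetical stand-ins; the trace lands as `.xplane.pb` files under the log directory, which TensorBoard or XProf can open.

```python
import tempfile
from pathlib import Path

import jax
import jax.numpy as jnp


@jax.jit
def train_step(w, x):
    # Hypothetical stand-in for a training step: a matmul plus a reduction.
    return jnp.tanh(x @ w).sum()


def capture_profile(log_dir: str, num_steps: int = 3) -> list[Path]:
    """Trace a few steps into log_dir and return the .xplane.pb files written."""
    kw, kx = jax.random.split(jax.random.PRNGKey(0))
    w = jax.random.normal(kw, (512, 512))
    x = jax.random.normal(kx, (128, 512))

    # Compile once before tracing so compilation time stays out of the profile.
    train_step(w, x).block_until_ready()

    with jax.profiler.trace(log_dir):
        for step in range(num_steps):
            # StepTraceAnnotation marks step boundaries for step-time views.
            with jax.profiler.StepTraceAnnotation("train", step_num=step):
                train_step(w, x).block_until_ready()

    return sorted(Path(log_dir).rglob("*.xplane.pb"))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print(capture_profile(d))
```

For on-demand capture of a long-running job, JAX also provides `jax.profiler.start_server(port)`; with that server running, a profile can be requested from TensorBoard's capture dialog instead of wrapping code in `trace`.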

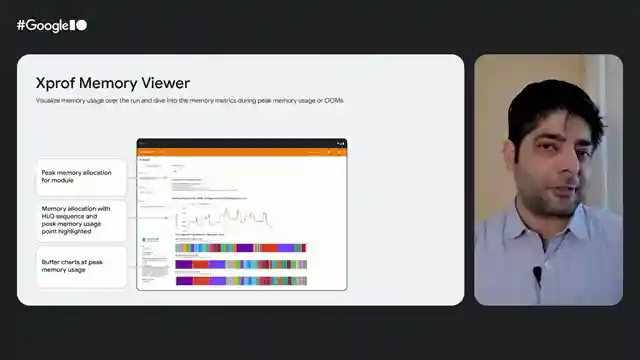

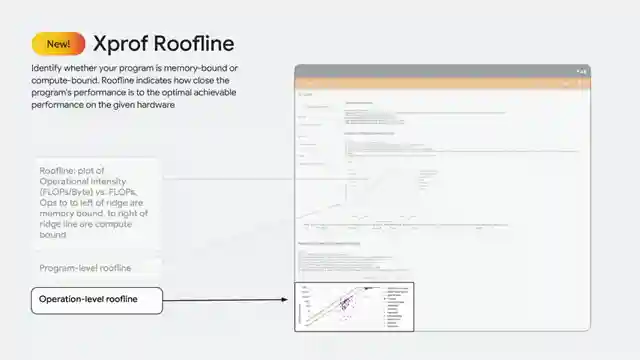

XProf, formerly known as the TensorFlow Profiler, has been renamed to reflect its support for all XLA-based frameworks, giving users a consistent optimization experience regardless of framework. It covers overall workload performance as well as memory allocation and framework operations, and its tools, including the graph viewer, op profile, and framework ops, help users pinpoint performance bottlenecks in their ML workloads.
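The graph viewer and op profile display the HLO operations that XLA actually compiled. As an illustration outside the profiler, JAX's ahead-of-time lowering API can print that optimized HLO as text for any jitted function. The `layer` function, `optimized_hlo` helper, and shapes below are hypothetical, chosen only to give XLA a small graph to optimize.

```python
import jax
import jax.numpy as jnp


def layer(x, w, b):
    # Hypothetical dense layer with bias and ReLU; XLA typically fuses the elementwise ops.
    return jax.nn.relu(x @ w + b)


def optimized_hlo(fn, *args) -> str:
    """Return the post-optimization HLO text XLA compiled for fn(*args)."""
    return jax.jit(fn).lower(*args).compile().as_text()


if __name__ == "__main__":
    x = jnp.ones((8, 16))
    w = jnp.ones((16, 4))
    b = jnp.zeros((4,))
    print(optimized_hlo(layer, x, w, b))
```

Reading this text alongside the graph viewer can help map fused ops in a profile back to the code that produced them.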

Using real-world scenarios on TPUs and GPUs, the team demonstrates XProf in action. By adjusting batch sizes and improving the compute-to-communication ratio, they show how the profiler's findings can turn a slow ML job into a much faster one. The Cloud Diagnostics XProf library and the XProf tool are aimed at both experienced ML practitioners and newcomers who want to optimize their ML workloads on Google Cloud.
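To see why batch size moves the compute-to-communication ratio, here is a simplified back-of-the-envelope model of a data-parallel step, under stated assumptions: compute time scales with the per-device batch, while the gradient all-reduce (modeled as a ring all-reduce) depends only on gradient size and device count, and any overlap between the two is ignored. The `Setup` fields and all numbers are hypothetical, not measurements from the video.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Setup:
    # All values are hypothetical placeholders, not measurements from the video.
    num_devices: int
    flops_per_example: float  # forward + backward FLOPs for one example
    peak_flops: float         # per-device FLOP/s
    grad_bytes: float         # size of the gradients all-reduced each step
    link_bandwidth: float     # per-device interconnect bandwidth, bytes/s


def compute_time(s: Setup, per_device_batch: int) -> float:
    # Compute grows linearly with the number of examples each device processes.
    return per_device_batch * s.flops_per_example / s.peak_flops


def allreduce_time(s: Setup) -> float:
    # A ring all-reduce sends about 2 * (n - 1) / n of the gradient bytes per device,
    # independent of batch size.
    n = s.num_devices
    return 2 * (n - 1) / n * s.grad_bytes / s.link_bandwidth


def compute_to_comm_ratio(s: Setup, per_device_batch: int) -> float:
    return compute_time(s, per_device_batch) / allreduce_time(s)


if __name__ == "__main__":
    setup = Setup(num_devices=8, flops_per_example=6e9, peak_flops=1e14,
                  grad_bytes=4e8, link_bandwidth=1e11)
    for batch in (8, 32, 128):
        print(batch, round(compute_to_comm_ratio(setup, batch), 3))
```

Under this model the ratio grows linearly with per-device batch size, which is one reason a larger batch can hide communication cost; real workloads also depend on overlap and memory limits that the model leaves out.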

Image copyright YouTube

Watch "Unlocking ML performance secrets with insights from TensorBoard" on YouTube
