Mastering Time Series Analysis: LSTM, GRU, and Hidden States

- Authors

- Published on

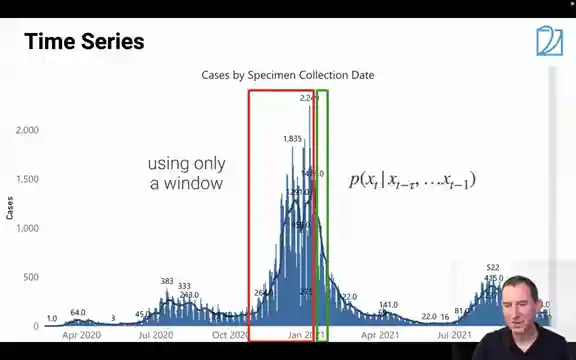

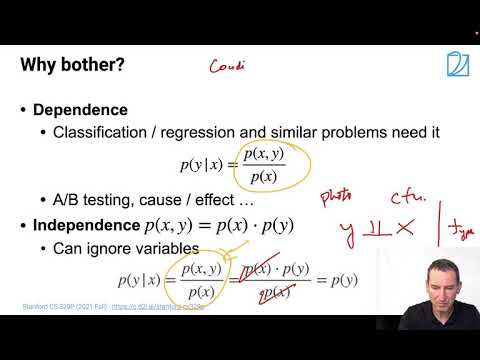

On this riveting episode of the Alex Smola channel, the team delves into the fascinating world of dependent random variables and sequence models. They use a quirky analogy of ducklings following one another to illustrate the structured dependencies these models capture. Moving on to real-world applications, they dissect the statistical intricacies of modeling the joint distribution of time series data, using COVID-19 observations in Santa Clara County as the example. They show that decomposing the joint distribution forward in time, conditioning each observation on the ones before it, yields more accurate predictions than the backward decomposition, which conditions each observation on the ones after it.
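For reference, the two decompositions can be written out as below. Both are exact applications of the chain rule of probability, so any gap in predictive accuracy comes from how well each conditional distribution can be learned from data, not from the math itself. The notation $x_1, \dots, x_T$ for the observed series is an assumption of this sketch, not taken from the lecture.

```latex
\begin{aligned}
\text{forward:}\quad  p(x_1, \dots, x_T) &= p(x_1) \prod_{t=2}^{T} p(x_t \mid x_1, \dots, x_{t-1}) \\
\text{backward:}\quad p(x_1, \dots, x_T) &= p(x_T) \prod_{t=1}^{T-1} p(x_t \mid x_{t+1}, \dots, x_T)
\end{aligned}
```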

The discussion then turns to short-range prediction, emphasizing that, because of temporal dependencies, recent observations carry the most useful information for modeling. Autoregressive models come into focus as a way to tame long-range dependencies: rather than conditioning on the entire history, they predict each value from a fixed window of the most recent observations. Takens' theorem is introduced as theoretical support for predicting from a finite number of past steps, with the caveat that modeling succeeds only under reasonable distributional assumptions and with stationary data.
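To make the autoregressive idea concrete, here is a minimal sketch, assuming NumPy and a plain least-squares fit, of a model that predicts each value from the previous `tau` observations. The function names `make_lagged`, `fit_ar`, and `predict_next` and the window size `tau` are hypothetical illustrations, not code from the lecture; real data would also call for checking stationarity and modeling noise.

```python
import numpy as np

def make_lagged(series, tau):
    """Build a design matrix whose row j holds the tau values preceding its target."""
    x = np.asarray(series, dtype=float)
    # Row j contains x[j], ..., x[j + tau - 1] (oldest first); its target is x[j + tau].
    features = np.stack([x[i : len(x) - tau + i] for i in range(tau)], axis=1)
    targets = x[tau:]
    return features, targets

def fit_ar(series, tau):
    """Least-squares fit of x_t ~ w . (x_{t-tau}, ..., x_{t-1}) + b."""
    features, targets = make_lagged(series, tau)
    # Append a column of ones so the intercept b is learned alongside the weights.
    design = np.hstack([features, np.ones((len(features), 1))])
    coef, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return coef[:-1], coef[-1]

def predict_next(series, weights, bias):
    """One-step-ahead prediction from the last tau observations (oldest first)."""
    window = np.asarray(series[-len(weights):], dtype=float)
    return float(window @ weights + bias)
```

The weights come back ordered oldest lag first, matching the column order of the design matrix, so `predict_next` feeds the window in the same order. Predicting further ahead would mean feeding predictions back in as inputs, which is exactly where errors start to compound.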

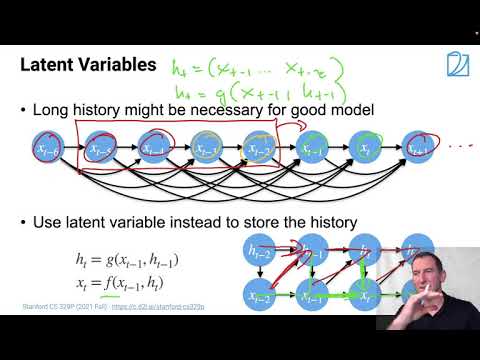

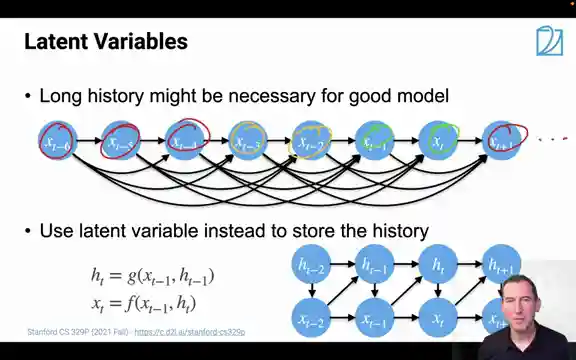

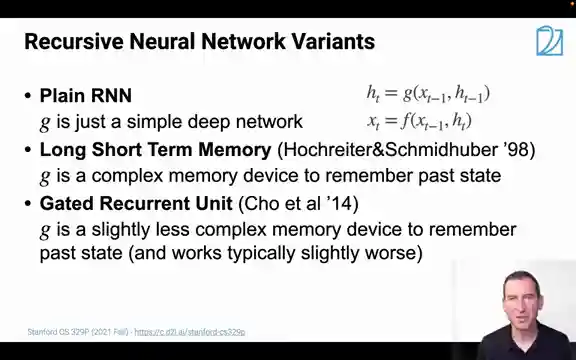

Transitioning to hidden states, the team explores how a latent state that summarizes the past can streamline prediction, drawing a parallel with the predictable cycle of traffic lights. They compare latent variable models with autoregressive models and trace how LSTM and GRU models evolved as building blocks of deep learning. The LSTM manages a dedicated memory cell through its gates, while the GRU merges the forget and input gates into a single update gate, which cuts the number of parameters and the computation per step. Together, these models enable more efficient and accurate predictions on complex sequences.
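As a minimal sketch of the gate-merging idea, assuming NumPy and the convention $h_t = z_t \odot h_{t-1} + (1 - z_t) \odot \tilde h_t$ (some references swap the roles of $z_t$ and $1 - z_t$), here is a single GRU step. The helper names `init_gru_params`, `gru_step`, and `run_gru` and the dictionary parameter layout are hypothetical, and the weights are random rather than trained.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru_params(input_dim, hidden_dim, seed=0):
    """Hypothetical layout: one (W, U, b) triple per gate, small random weights."""
    rng = np.random.default_rng(seed)

    def triple():
        return (rng.normal(0.0, 0.1, (input_dim, hidden_dim)),
                rng.normal(0.0, 0.1, (hidden_dim, hidden_dim)),
                np.zeros(hidden_dim))

    return {"update": triple(), "reset": triple(), "candidate": triple()}

def gru_step(x, h, params):
    """One GRU time step for input x (input_dim,) and hidden state h (hidden_dim,)."""
    Wz, Uz, bz = params["update"]
    Wr, Ur, br = params["reset"]
    Wh, Uh, bh = params["candidate"]
    z = sigmoid(x @ Wz + h @ Uz + bz)  # update gate: does the job of the LSTM's forget and input gates
    r = sigmoid(x @ Wr + h @ Ur + br)  # reset gate: how much of h feeds into the candidate
    h_tilde = np.tanh(x @ Wh + (r * h) @ Uh + bh)  # candidate hidden state
    # z near 1 keeps the old state; z near 0 overwrites it with the candidate.
    return z * h + (1.0 - z) * h_tilde

def run_gru(inputs, params, hidden_dim):
    """Fold a sequence of input vectors into a final hidden state."""
    h = np.zeros(hidden_dim)
    for x in inputs:
        h = gru_step(np.asarray(x, dtype=float), h, params)
    return h
```

Because one gate value $z_t$ decides both how much old state to keep and how much new candidate to write, the GRU needs one gate fewer than the LSTM and carries no separate memory cell alongside its hidden state.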

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch Lecture 8, Part 2: Sequence Models on YouTube


Related Articles

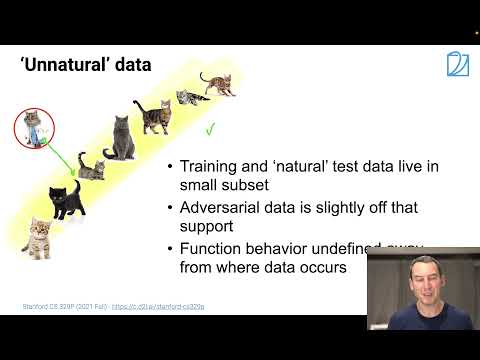

Unveiling Adversarial Data: Deception in Recognition Systems

Explore the world of adversarial data with Alex Smola's team, uncovering how subtle tweaks deceive recognition systems. Discover the power of invariances in enhancing classifier accuracy and defending against digital deception.

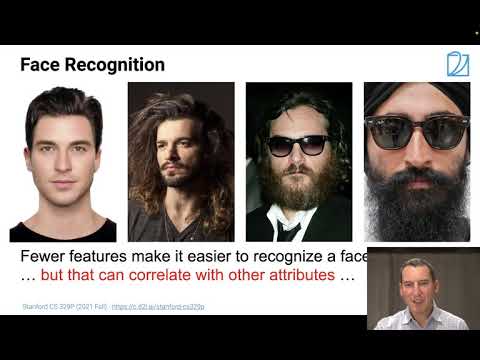

Unveiling Coverage Shift and AI Bias: Optimizing Algorithms with Generators and GANs

Explore coverage shift and AI bias in this insightful Alex Smola video. Learn about using generators, GANs, and dataset consistency to address biases and optimize algorithm performance. Exciting revelations await in this deep dive into the world of artificial intelligence.

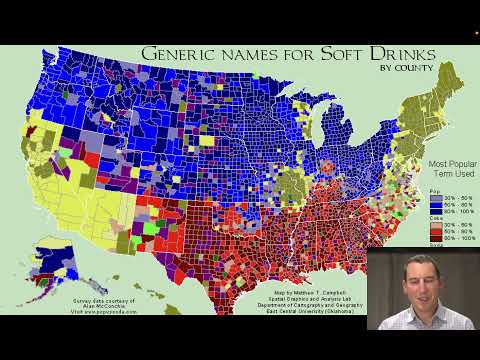

Mastering Coverage Shift in Machine Learning

Explore coverage shift in machine learning with Alex Smola. Learn how data discrepancies can derail classifiers, leading to failures in real-world applications. Discover practical solutions and pitfalls to avoid in this insightful discussion.

Mastering Random Variables: Independence, Sequences, and Graphs with Alex Smola

Explore dependent vs. independent random variables, sequence models like RNNs, and graph concepts in this dynamic Alex Smola lecture. Learn about independence tests, conditional independence, and innovative methods for assessing dependence. Dive into covariance operators and information theory for a comprehensive understanding of statistical relationships.