Mastering JAX: Turbocharge Machine Learning with NeuralNine

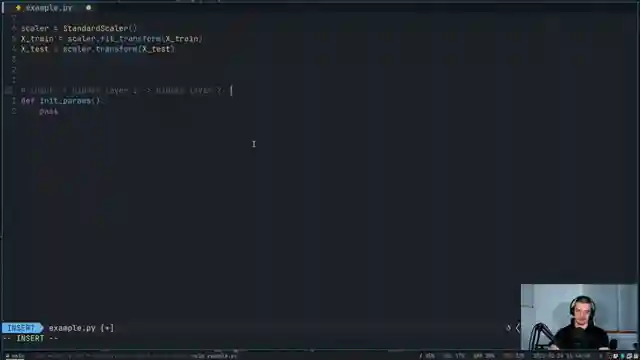

In this episode, NeuralNine dives into JAX, a library for high-performance machine learning on GPUs and TPUs. JAX combines a NumPy-like API with just-in-time compilation and automatic differentiation, which makes it a strong fit for fast deep learning. The video wastes no time: it starts with the basics of JAX, then builds and trains a neural network on the classic iris dataset. It also shows how to run the code on TPUs for even faster performance.
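As a rough illustration of the workflow described above, here is a minimal sketch of training a small classifier in JAX. It is not the code from the video: the data is a synthetic stand-in with the same shape as iris (150 samples, 4 features, 3 classes), and the layer sizes, learning rate, and names such as `forward`, `loss_fn`, and `train_step` are assumptions chosen for illustration.

```python
import jax
import jax.numpy as jnp

# Synthetic stand-in for iris (assumption): 150 samples, 4 features, 3 classes.
key = jax.random.PRNGKey(0)
key, k_x, k_w1, k_w2 = jax.random.split(key, 4)
X = jax.random.normal(k_x, (150, 4))
y = jnp.argmax(X[:, :3], axis=1)  # hypothetical labels derived from the features

# Parameters live in a plain dict; JAX treats it as a pytree.
params = {
    "w1": 0.1 * jax.random.normal(k_w1, (4, 16)),
    "b1": jnp.zeros(16),
    "w2": 0.1 * jax.random.normal(k_w2, (16, 3)),
    "b2": jnp.zeros(3),
}

LEARNING_RATE = 0.1  # illustrative value, not taken from the video


def forward(params, x):
    """One hidden layer with tanh, returning unnormalized class scores."""
    hidden = jnp.tanh(x @ params["w1"] + params["b1"])
    return hidden @ params["w2"] + params["b2"]


def loss_fn(params, x, y):
    """Mean negative log-likelihood of the true class."""
    log_probs = jax.nn.log_softmax(forward(params, x))
    return -jnp.mean(log_probs[jnp.arange(y.shape[0]), y])


@jax.jit
def train_step(params, x, y):
    """One step of full-batch gradient descent, compiled with jit."""
    loss, grads = jax.value_and_grad(loss_fn)(params, x, y)
    new_params = jax.tree_util.tree_map(
        lambda p, g: p - LEARNING_RATE * g, params, grads
    )
    return new_params, loss


initial_loss = float(loss_fn(params, X, y))
for _ in range(200):
    params, loss = train_step(params, X, y)
final_loss = float(loss)

accuracy = float(jnp.mean(jnp.argmax(forward(params, X), axis=1) == y))
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}, train accuracy: {accuracy:.2f}")
```

Note that `train_step` returns new parameters instead of updating them in place, which matches JAX's functional style.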

Installing JAX is a breeze, but the install command depends on the backend you target: CPU, GPU, or TPU. The video also unpacks the name: just-in-time compilation, automatic differentiation, and XLA (Accelerated Linear Algebra), the compiler that generates optimized code for each backend. The video is an introduction rather than a complete reference, and it points viewers to the documentation for a deeper dive. JAX's own version of NumPy, `jax.numpy`, offers a familiar API with a few important differences, the most notable being that arrays are immutable.
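A minimal sketch of these two points, assuming JAX is already installed for your backend: it checks which backend JAX picked up, then shows that in-place assignment fails on a JAX array and that the `.at[...].set(...)` syntax returns an updated copy instead. The variable names are illustrative.

```python
import jax
import jax.numpy as jnp

# Report the active backend ("cpu", "gpu", or "tpu") and the visible devices.
print(jax.default_backend())
print(jax.devices())

x = jnp.arange(5)

# NumPy-style in-place assignment is rejected because JAX arrays are immutable.
mutation_error = None
try:
    x[0] = 10
except TypeError as err:
    mutation_error = err

# The functional alternative returns a new array and leaves x unchanged.
y = x.at[0].set(10)

print(x)  # original array, still starting with 0
print(y)  # updated copy, starting with 10
```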

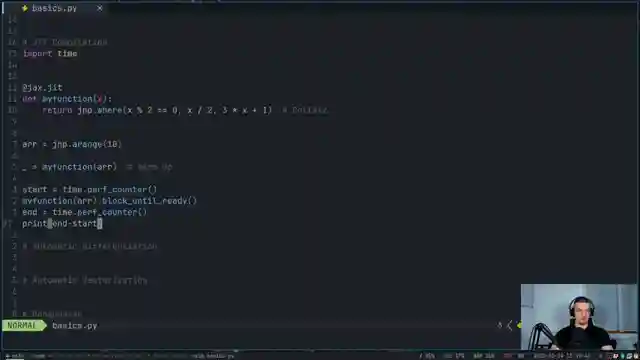

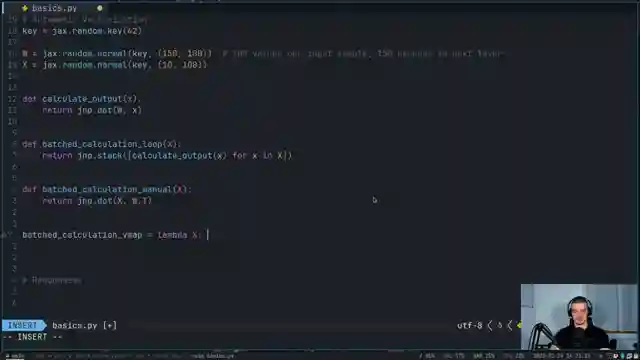

At the heart of JAX's power is JIT compilation, which compiles a function once and reuses the optimized version on later calls, cutting execution time. Automatic differentiation is the other headline feature: JAX computes derivatives for a wide range of functions, so you do not have to work out the calculus by hand. The video also pulls back the curtain on the intermediate representation JAX produces when it traces a function for JIT compilation, offering a peek at the inner workings. Some functions cannot be JIT-compiled as written, for example those whose Python control flow depends on the input values, but automatic differentiation still works on them, which keeps model training code straightforward.
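The sketch below illustrates these ideas with small functions that are assumptions for illustration, not the ones from the video. It compiles a function with `jax.jit`, differentiates it with `jax.grad`, prints the traced intermediate representation with `jax.make_jaxpr`, and shows a function with a value-dependent `if` that fails under `jit` but still differentiates.

```python
import jax
import jax.numpy as jnp


def f(x):
    """Scalar-valued function: sum of x^2 + 3x over all elements."""
    return jnp.sum(x ** 2 + 3.0 * x)


f_jit = jax.jit(f)  # compiled on first call, reused afterwards
df = jax.grad(f)    # derivative of f with respect to x

x = jnp.array([1.0, 2.0, 3.0])
value = f_jit(x)    # (1 + 3) + (4 + 6) + (9 + 9) = 32
gradient = df(x)    # 2x + 3 = [5, 7, 9]

# Inspect the intermediate representation JAX traces before compiling.
print(jax.make_jaxpr(f)(x))


def piecewise(x):
    """Python branch on the input value: fine eagerly, not traceable by jit."""
    if x > 0:
        return x ** 2
    return -x


jit_failed = False
try:
    jax.jit(piecewise)(3.0)
except jax.errors.ConcretizationTypeError:
    # Under jit, x is an abstract tracer, so `x > 0` has no concrete truth value.
    jit_failed = True

# grad evaluates with concrete values, so the branch is resolved normally.
slope_pos = jax.grad(piecewise)(3.0)   # d/dx x^2 at 3 is 6
slope_neg = jax.grad(piecewise)(-2.0)  # d/dx (-x) is -1
```

For branches like this under `jit`, options include `jnp.where` or `jax.lax.cond`, which express the condition in a form JAX can trace.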

Image copyright YouTube

Watch JAX Tutorial: The Lightning-Fast ML Library For Python on YouTube

Viewer Reactions for JAX Tutorial: The Lightning-Fast ML Library For Python

- Request for an advanced and detailed course on JAX
- Appreciation for the long-form content
- Interest in more videos about JAX
- Request for an advanced tutorial on JAX
- Request for a playlist of ML libraries covered
- Interest in a detailed tutorial
- Request for a tutorial on creating a chatbot that uses web-scraped data
- Inquiry on running with Hugging Face models
- Question about vmapping the forward function
- Inquiry about customizing Pop!_OS appearance
- Question about the code editor being used
- Request for a Hindi audio track for the video
