Mastering Covariate Shift in Machine Learning

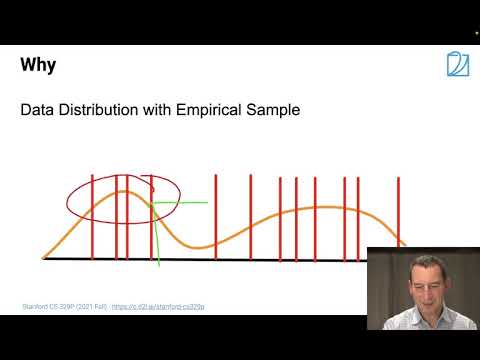

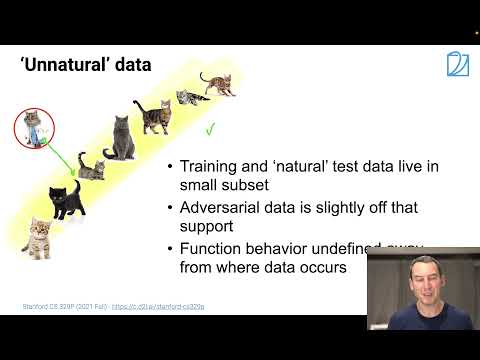

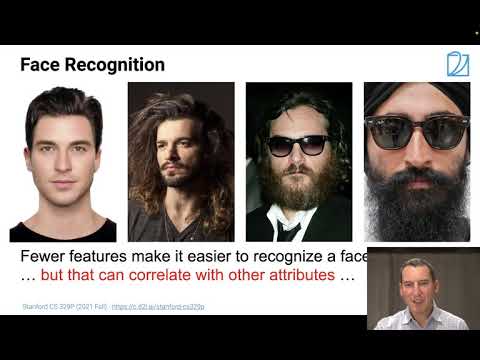

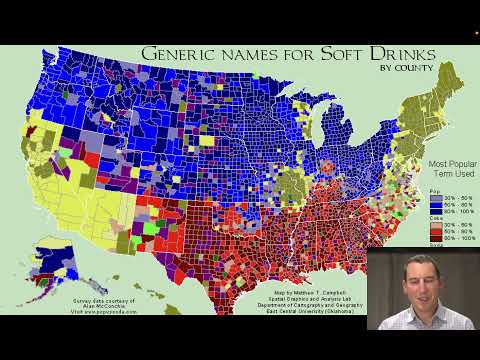

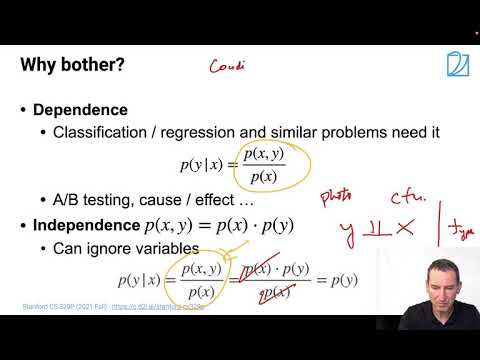

In this lecture, Alex Smola puts covariate shift center stage. Picture this: you train a model on one dataset, but when it comes time to test it, the data follows a different distribution. It's like expecting a pint of lager and getting a cup of tea instead. Alex breaks down the reasons behind this mismatch, from the limits of empirical datasets to adversarial challenges and good old label shift. It's a rollercoaster of machine learning drama, folks.
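For reference, the two shifts named above are usually formalized as follows. These are the standard textbook definitions rather than a quote from the lecture, and the symbols $p$ (training distribution) and $q$ (test distribution) are chosen here for illustration.

$$
\text{covariate shift:}\quad p(x) \neq q(x), \qquad p(y \mid x) = q(y \mid x)
$$

$$
\text{label shift:}\quad p(y) \neq q(y), \qquad p(x \mid y) = q(x \mid y)
$$

In words: under covariate shift the inputs change but the labeling rule does not, while under label shift the class proportions change but each class still looks the same.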

Using the analogy of classifying cats and dogs, Alex shows how decision boundaries shift as more data arrives. It's like navigating a minefield of misclassified black cats and forgotten Dobermans. The lecture doesn't hold back, showcasing real-world examples like a 56-layer network whose performance differs surprisingly between the training and test sets. It's a wild ride through the neural network jungle, with twists and turns at every layer.

But fear not, Alex doesn't leave us hanging. He digs into empirical risk minimization, which optimizes the average loss on the training data, versus the expected risk the model actually faces at test time. It's like balancing on a tightrope between training accuracy and real-world performance. And let's not forget validation sets, the held-out data that gives an honest estimate of that real-world performance. Alex even throws in a trick or two, like adding noisy variants of the data to make the training set more diverse. It's a masterclass in machine learning survival tactics, with Alex Smola leading the charge like a fearless explorer in the vast wilderness of data science.
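To make the gap between training accuracy and real-world performance concrete, here is a minimal sketch, not taken from the lecture. The empirical risk is the average loss on the $n$ training points, $\hat{R}(f) = \frac{1}{n}\sum_{i=1}^{n} \ell(f(x_i), y_i)$, while the expected risk is $R(f) = \mathbb{E}_{(x,y)}[\ell(f(x), y)]$, which a validation set estimates. Everything below is an assumption made for illustration: the synthetic two-blob "cats vs. dogs" data, the 1-nearest-neighbor classifier, and the helper names `make_data`, `predict_1nn`, `error_rate`, and `augment_with_noise`.

```python
import numpy as np


def make_data(n, rng):
    """Two overlapping Gaussian blobs standing in for cats (0) and dogs (1)."""
    y = rng.integers(0, 2, size=n)
    # Class 0 is centered at (0, 0), class 1 at (2, 2); both have unit variance.
    x = rng.normal(loc=y[:, None] * 2.0, scale=1.0, size=(n, 2))
    return x, y


def predict_1nn(x_train, y_train, x_query):
    """Label each query point with the label of its nearest training point."""
    sq_dist = ((x_query[:, None, :] - x_train[None, :, :]) ** 2).sum(axis=-1)
    return y_train[sq_dist.argmin(axis=1)]


def error_rate(y_true, y_pred):
    """Average 0-1 loss on a sample, i.e. the empirical risk on that sample."""
    return float(np.mean(y_true != y_pred))


def augment_with_noise(x, y, copies, scale, rng):
    """Append `copies` jittered variants of every point, keeping its label."""
    xs = [x] + [x + rng.normal(scale=scale, size=x.shape) for _ in range(copies)]
    ys = [y] * (copies + 1)
    return np.concatenate(xs), np.concatenate(ys)


rng = np.random.default_rng(0)
x_train, y_train = make_data(200, rng)
x_val, y_val = make_data(1000, rng)  # held-out data from the same distribution

# 1-NN memorizes the training set, so its training error is zero ...
train_err = error_rate(y_train, predict_1nn(x_train, y_train, x_train))
# ... but the validation error shows what to expect on unseen data.
val_err = error_rate(y_val, predict_1nn(x_train, y_train, x_val))

# Noisy variants of the training points, in the spirit of the trick above.
x_aug, y_aug = augment_with_noise(x_train, y_train, copies=3, scale=0.1, rng=rng)
val_err_aug = error_rate(y_val, predict_1nn(x_aug, y_aug, x_val))

print(f"training error:             {train_err:.3f}")
print(f"validation error:           {val_err:.3f}")
print(f"validation error (w/noise): {val_err_aug:.3f}")
```

The 1-nearest-neighbor model is chosen only because it makes the gap obvious: it is perfect on the data it has memorized and visibly worse on the validation set. Whether the noisy copies help depends on the data and the noise scale, so the sketch prints the number rather than promising an improvement.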

Image copyright YouTube

Watch Lecture 6, Part 1, Generalization on YouTube

Viewer Reactions for Lecture 6, Part 1, Generalization

Request for more lectures to be uploaded

Appreciation for the lectures
