Master Local LLM Deployment with Docker Model Runner

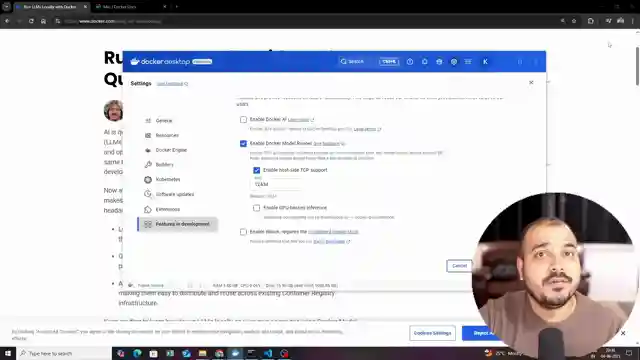

In this episode on Krish Naik's channel, we dive into running LLMs locally with Docker Model Runner. Picture this: developers who want the freedom to fine-tune their LLMs, test them rigorously, and pit open-source models against each other, all on their own machines. The key? Docker Desktop, version 4.40 or later. But the real magic lies in two settings: enabling Docker Model Runner and turning on host-side TCP support, which lets applications on the host reach the model over a local port.

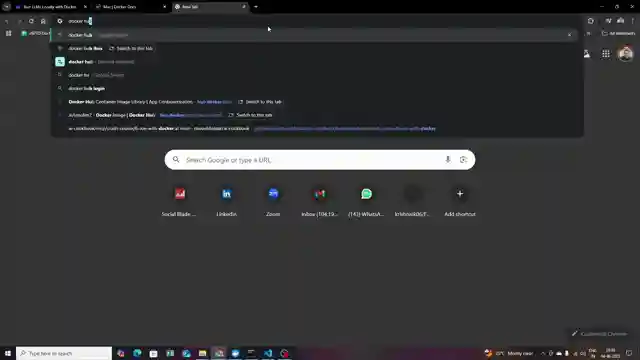

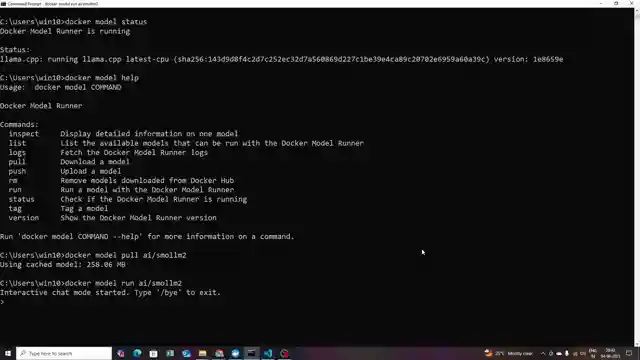

But wait, there's more! Next comes a command prompt walkthrough to verify that the model runner is active. A quick "docker model status" confirms it is running, and from there the subcommands inspect, list, pull, push, and run are all available. Then it's on to Docker Hub, which hosts a range of ready-to-pull LLMs, from Llama 3.2 for chat assistance to models suited to text extraction.
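The status check and the other subcommands can also be scripted. The following is a minimal Python sketch, assuming the `docker` CLI is on the PATH and Model Runner is enabled. The helper names `model_command` and `run_model_command` are hypothetical, and only the subcommands named in the video are accepted.

```python
import shutil
import subprocess

# Subcommands of `docker model` named in the walkthrough.
KNOWN_SUBCOMMANDS = {"status", "inspect", "list", "pull", "push", "run"}


def model_command(subcommand, *args):
    """Build the argv list for a `docker model <subcommand>` call (hypothetical helper)."""
    if subcommand not in KNOWN_SUBCOMMANDS:
        raise ValueError(f"unsupported docker model subcommand: {subcommand}")
    return ["docker", "model", subcommand, *args]


def run_model_command(subcommand, *args):
    """Run the command and return its stdout, or None if docker is not installed."""
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(
        model_command(subcommand, *args),
        capture_output=True,
        text=True,
        check=False,  # leave error handling to the caller
    )
    return result.stdout
```

Calling `run_model_command("status")` mirrors "docker model status", and `run_model_command("list")` should show the models already pulled to the machine.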

Rev up your engines: pull a model with "docker model pull ai/smollm2" and kick it into gear with "docker model run ai/smollm2". This opens an interactive chat session in the terminal, where you can hold a conversation or extract information with just a few keystrokes. When it's time to bid adieu, a simple "/bye" closes the session. Questions and facts get fast answers, and everything runs locally. And the cherry on top? The model runner is compatible with the OpenAI library, so code written against the OpenAI API can talk to the local model.
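Because the runner accepts OpenAI-style chat requests, a local model can be queried over HTTP once host-side TCP support is on. Here is a minimal standard-library sketch. The base URL (port 12434 and the `/engines/v1` path) and the model name `ai/smollm2` are assumptions to check against your own Docker Desktop settings, and `build_chat_payload` and `ask_local_model` are hypothetical helper names.

```python
import json
import urllib.request

# Assumed defaults: adjust to match your Docker Desktop settings.
BASE_URL = "http://localhost:12434/engines/v1"
MODEL = "ai/smollm2"


def build_chat_payload(question, model=MODEL, system_prompt="You are a helpful assistant."):
    """Return an OpenAI-style chat-completions request body."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
    }


def ask_local_model(question, base_url=BASE_URL):
    """POST the question to the local endpoint and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(build_chat_payload(question)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # OpenAI-compatible responses carry the text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Calling `ask_local_model("What is Docker?")` only works once the model has been pulled and the runner is reachable on the assumed port. The `openai` Python package can likely be pointed at the same endpoint by passing this base URL as `base_url` along with a placeholder API key.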

Image copyright YouTube

Watch "Run LLMs Locally With Docker Model Runner" on YouTube

Viewer Reactions for Run LLMs Locally With Docker Model Runner

Compatibility with modules other than OpenAI

Request for information on the laptop used for coding and editing

Compatibility with LangChain

Request for video in Hindi
