Llama 4 AI Model: Behemoth, Maverick, and Scout Revolutionizing Open-Source Accessibility

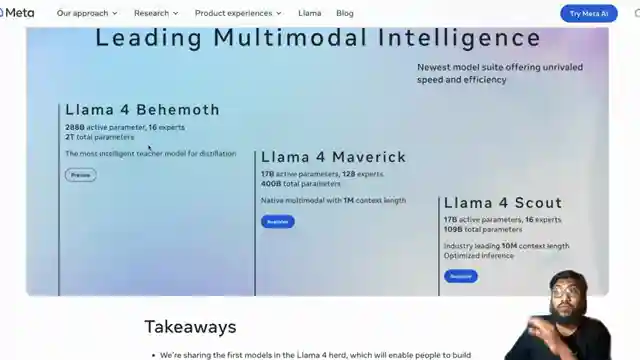

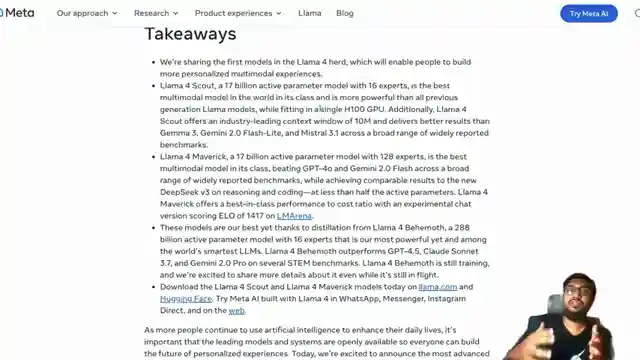

Today, on 1littlecoder, we dive into the thrilling world of AI with the arrival of Llama 4. This powerhouse model family comes in three variants: Behemoth, Maverick, and Scout. Scout and Maverick are available for download now, while Behemoth, still in training, is already reported to outperform the competition on several benchmarks. Scout boasts a groundbreaking 10-million-token context window, setting a new standard in the industry. Maverick, with its 128 experts and 400 billion total parameters (only about 17 billion of which are active for any given token), promises top-tier performance at a modest compute cost.

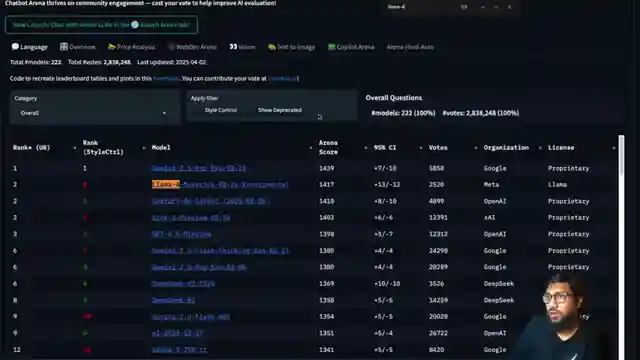

But wait, there's more! Behemoth takes the crown as the largest model in the lineup, with a mind-boggling 2 trillion parameters. In the benchmarks presented, this beast comes out ahead of competitors like Gemini 2.0 Pro and Claude 3.7 Sonnet, showcasing its strength in the AI arena. Despite its impressive capabilities, Llama 4 comes with a catch: a license restriction for companies whose products have more than 700 million monthly active users. This limitation has sparked controversy among enthusiasts, who question whether Llama 4 is open source in the true sense.

As we delve deeper into the world of Llama 4, we uncover the details of its models and their performance benchmarks. Maverick, the workhorse of the lineup, stands tall against the likes of GPT-4o and Gemini 2.0 Flash, proving its mettle in the AI landscape. The video sheds light on the models' cost-effectiveness, efficiency, and benchmark performance, painting a picture of a game-changer in the AI realm. With its mixture-of-experts approach, in which only a few expert sub-networks run for each token, Llama 4 sets a new standard for AI models, promising a bright future for open-source AI accessibility.
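To make the mixture-of-experts idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not Meta's implementation: the expert count of 128 comes from the Maverick description above, while the function names (`route_token`, `moe_layer`), the scalar "experts", and the random router scores are all hypothetical stand-ins chosen for illustration.

```python
import math
import random


def softmax(scores):
    """Turn raw router scores into probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, top_k=1):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, experts, router_scores, top_k=1):
    """Run only the selected experts and mix their outputs by routing weight."""
    routes = route_token(router_scores, top_k)
    return sum(weight * experts[i](token) for i, weight in routes)


# Toy setup (assumption): each "expert" is a scalar multiplier standing in
# for a full feed-forward block.
NUM_EXPERTS = 128
experts = [lambda x, k=k: x * (k + 1) for k in range(NUM_EXPERTS)]

rng = random.Random(0)
router_scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

routes = route_token(router_scores, top_k=1)
output = moe_layer(2.0, experts, router_scores, top_k=1)
print(f"experts used for this token: {len(routes)} of {NUM_EXPERTS}")
```

The point of the sketch is that every expert's parameters exist in the model, but only the routed ones do any work for a given token. That is how a model can have a very large total parameter count while keeping per-token compute much smaller.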

Image copyright Youtube


Watch "Just in: LLAMA 4 with 10 Million Context!!!" on YouTube

Viewer Reactions for "Just in: LLAMA 4 with 10 Million Context!!!"

- Disappointment over the 700 million monthly active user limit
- Comments on the 10 million context length and its implications
- Speculation on the number of users for the license and the need for tier-based licenses
- Concerns about the lack of public cost information for exceeding the user limit
- Comparison of the context window length with other models
- Skepticism about multimodality use in Llama models
- Comments on the licensing agreement and its interpretation
- Comparison between Gemini 2.5 Pro and Llama 4 Behemoth
- Request for testing the model's ability to memorize entire contexts
- General excitement and appreciation for the new model's features
