Alibaba's QwQ-32B: Game-Changing AI Model Challenges DeepSeek R1

In the latest episode of AI Revolution, Alibaba's Qwen team has unleashed a game-changer in the form of the QwQ-32B language model. This David among Goliaths packs a punch with just 32 billion parameters, yet manages to go toe-to-toe with the heavyweight DeepSeek R1, a model with 671 billion parameters. It's like watching a scrappy underdog take on the reigning champion and actually hold its ground. The real kicker? According to the video, QwQ-32B can run on hardware with a mere 24 GB of VRAM, a fraction of what a model the size of DeepSeek R1 demands. It's like bringing a slingshot to a tank battle and still holding your own.
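The 24 GB figure is easier to judge with some back-of-the-envelope arithmetic. The sketch below is an illustration only: it assumes the model's memory footprint is dominated by its weights and ignores the KV cache, activations, and runtime overhead, and the helper name `weight_memory_gb` is made up for this example. The article does not say which precision the video had in mind, so the precisions listed are assumptions too.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Parameter count quoted in the article (32 billion).
NUM_PARAMS = 32e9

# Assumed storage precisions; the article does not specify one.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>6}: ~{weight_memory_gb(NUM_PARAMS, bits):.0f} GB of weights")
```

Under these assumptions, 16-bit weights alone come to about 64 GB, while a 4-bit quantized copy needs about 16 GB, which leaves some headroom on a 24 GB card. So the 24 GB claim most plausibly refers to a quantized build of the model rather than full-precision weights.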

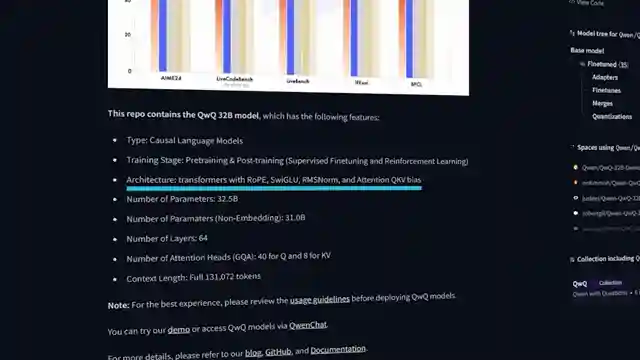

But what sets this model apart is not just its David-and-Goliath story. The Qwen team has trained QwQ-32B with reinforcement learning, giving it a leg up in reasoning. This isn't just about crunching numbers; it's about thinking, adapting, and problem-solving like a champ. With 64 Transformer layers and a host of other nifty features, this model is no one-trick pony. It's like giving a race car a turbo boost and watching it zoom past the competition.

The buzz around QwQ-32B isn't just hot air. It's backed by solid benchmark results, where it goes head-to-head with big guns like DeepSeek R1 and holds its own. And the best part? It's open source, meaning you can tweak it, fine-tune it, and make it your own. It's like getting a high-performance sports car and being handed the keys to customize it to your heart's content. So buckle up, because QwQ-32B is not just a model; it's a revolution in the making.

Image copyright YouTube

Watch "New Small Open Source AI Just Beat DeepSeek R1 and o1 mini Experts SHOCKED" on YouTube

Viewer Reactions for "New Small Open Source AI Just Beat DeepSeek R1 and o1 mini Experts SHOCKED"

QwQ-32B is considered a game-changer in AI, with smaller models outperforming larger ones.

Suggestions for AI companies to have a 'Mother' AI for training smaller, more efficient models.

Questions about the accuracy of GPU specifications shown in the video.

Potential breakthroughs with distillation combined with databases.

Discussion on the capabilities of AI models in reading compressed PDF files.

Interest in ancient equation scripts for calculating space and time.

Comments on the need for better management of the increasing number of new AIs.

Curiosity about China's involvement in open source despite being considered a less open society.

Speculation on the VRAM capacity of AMD's new RX 9070 GPU.

Personal anecdotes and experiences with running AI models on specific hardware setups.
