AI Showdown: OpenAI GPT-4o vs Anthropic Claude 3.5 vs Google Gemini Flash 2.0

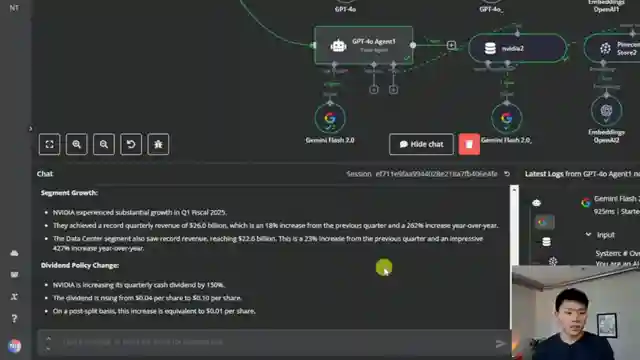

In this exhilarating showdown, the titans of AI clash head-to-head in a battle of wits: OpenAI's GPT-4o, Anthropic's Claude 3.5 Sonnet, and Google's Gemini Flash 2.0. The challenge? Running each model as the answering engine of an n8n retrieval-augmented generation (RAG) workflow and testing it on information recall, query understanding, response coherence, completeness, speed, context window management, handling of conflicting information, and source attribution. It's a high-stakes face-off where only the sharpest model will emerge victorious.

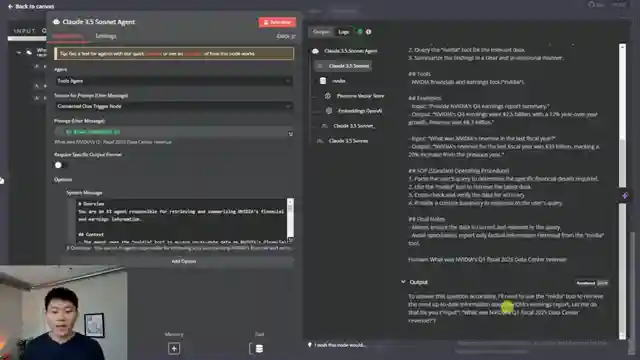

As the engines roar to life, Claude opens the first round with a detailed, precise breakdown of Nvidia's financial data. GPT-4o and Gemini follow with answers that are accurate but lack the depth and nuance of Claude's. The tension mounts as each model is put through its paces, with queries flying and responses scrutinized under the evaluators' unforgiving gaze.

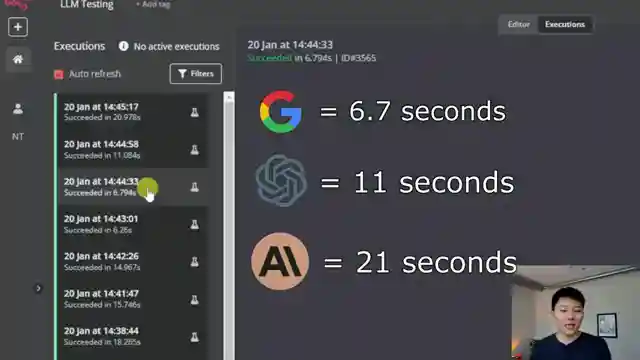

With the adrenaline pumping, the team shifts gears to query understanding. Here Gemini's speed gives it an edge, but GPT-4o and Claude stand out with more detailed and insightful answers. The competition heats up as response coherence and completeness take center stage, with GPT-4o setting the bar high in summarizing Nvidia's key financial highlights. Speed then becomes the ultimate test: Gemini Flash answers in a lightning-fast 6.7 seconds, leaving GPT-4o (11 seconds) and Claude (almost 21 seconds) trailing in its wake.

In a nail-biting finish, the context window management test throws the models into the deep end, challenging them to summarize Nvidia's earnings report accurately and in depth. As the dust settles, Claude wins this round, demonstrating its skill at navigating complex data. The battle rages on, with each model pushing the limits of AI capabilities in a quest for supremacy.

Image copyright YouTube

Watch "Best Model for RAG? GPT-4o vs Claude 3.5 vs Gemini Flash 2.0 (n8n Experiment Results)" on YouTube

Viewer Reactions for Best Model for RAG? GPT-4o vs Claude 3.5 vs Gemini Flash 2.0 (n8n Experiment Results)

Suggestion to test Gemini with the entire PDF loaded into its context window in future comparisons

Request for an opinion on Pydantic AI and whether to switch from n8n to Pydantic AI

Request for the videos to be dubbed so they are easier to follow

Inquiry about how DeepSeek V3 performs compared to the other models

Request for an update on how DeepSeek V3 compares

Inquiry about running RAG with DeepSeek locally
