Accelerator Obtainability Options for AML Workloads on GKE

- Authors

- Published on

- Published on

In this riveting episode by Google Cloud Tech, they delve into the thrilling world of accelerator obtainability options for AML workloads on GKE. The team, led by the dynamic duo of Mofi and Miko Jalinski, uncovers the challenges users face in securing cutting-edge hardware like TPUs and GPUs. From the intense competition among hardware vendors to the hefty bills users must foot, the quest for these limited resources is nothing short of a high-octane race against time and cost.

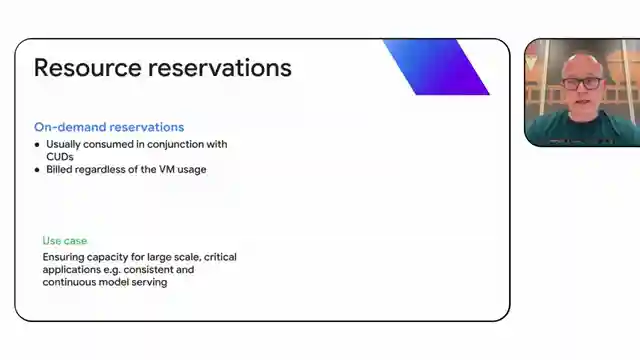

The adrenaline-fueled discussion introduces viewers to the heart-pounding choices between on-demand and spot options, each offering a unique set of risks and rewards. From the safety of full control with on-demand to the wild ride of price flexibility with spot, users must navigate these treacherous waters to optimize their cloud bill without sacrificing performance. Enter the world of reservations, where seasoned AI companies can stake their claim on future resources with strategic precision, ensuring a steady supply for critical applications and large-scale operations.

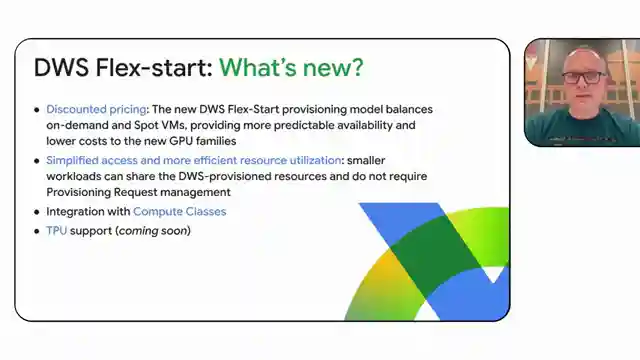

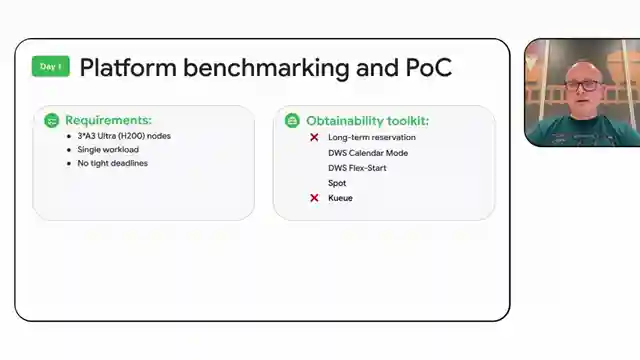

But the excitement doesn't stop there. Google Cloud Tech unveils the future reservations feature, a game-changer that empowers users to define their resource needs and secure them in advance, even for the latest and greatest accelerators like A3 ultra machines. The team's innovative approach doesn't end with reservations; they introduce DWS flexart, a dynamic mode that enhances accelerator obtainability without the constraints of reservations. With discounted pricing, integration with compute classes, and support for TPUs on the horizon, users are in for a pulse-pounding ride through the fast-paced world of AML workloads on GKE.

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch Improve GPU/TPU Obtainability with DWS Flex Start on GKE on Youtube

Viewer Reactions for Improve GPU/TPU Obtainability with DWS Flex Start on GKE

I'm sorry, but I cannot provide a summary without the video or context.

Related Articles

Mastering Real-World Cloud Run Services with FastAPI and Muslim

Discover how Google developer expert Muslim builds real-world Cloud Run services using FastAPI, uvicorn, and cloud build. Learn about processing football statistics, deployment methods, and the power of FastAPI for seamless API building on Cloud Run. Elevate your cloud computing game today!

The Agent Factory: Advanced AI Frameworks and Domain-Specific Agents

Explore advanced AI frameworks like Lang Graph and Crew AI on Google Cloud Tech's "The Agent Factory" podcast. Learn about domain-specific agents, coding assistants, and the latest updates in AI development. ADK v1 release brings enhanced features for Java developers.

Simplify AI Integration: Building Tech Support App with Large Language Model

Google Cloud Tech simplifies AI integration by treating it as an API. They demonstrate building a tech support app using a large language model in AI Studio, showcasing code deployment with Google Cloud and Firebase hosting. The app functions like a traditional web app, highlighting the ease of leveraging AI to enhance user experiences.

Nvidia's Small Language Models and AI Tools: Optimizing On-Device Applications

Explore Nvidia's small language models and AI tools for on-device applications. Learn about quantization, Nemo Guardrails, and TensorRT for optimized AI development. Exciting advancements await in the world of AI with Nvidia's latest hardware and open-source frameworks.